LEVEL-0: APPRENTICE

Basics of C++

Introduction to C++

INTRODUCTION TO PROGRAMMING LANGUAGES:

A program is a set of instructions that tells a computer what to do in order to come up with a solution to a particular problem. Programs are written using a programming language. A programming language is a formal language designed to communicate instructions to a computer. There are two major types of programming languages: low-level languages and high-level languages.

TYPES OF LANGUAGES:

Low-Level Languages : Low-level languages are referred to as 'low' because they are very close to how different hardware elements of a computer actually communicate with each other. Low-level languages are machine oriented and require extensive knowledge of computer hardware and its configuration. There are two categories of low-level languages: machine language and assembly language.

Machine language : Also called machine code, it is the only language that the computer understands directly, so it does not need to be translated. All instructions use binary notation and are written as strings of 1s and 0s. A program instruction in machine language may look something like this: 10110000 01100001 (on x86 processors, this instruction copies the value 97 into the AL register).

However, binary notation is very difficult for humans to understand. This is where assembly languages come in.

Assembly language : An assembly language is the first step towards improving program structure and making machine language more readable by humans. An assembly language uses short symbolic names made of letters and symbols in place of binary codes. A translator called an 'assembler' is required to translate assembly language into machine language. While easier than machine code, assembly languages are still fairly difficult to understand. This is why high-level languages were developed.

High-Level Languages : A high-level language is a programming language that uses English words and mathematical symbols, like +, -, % and many others, in its instructions. When using the term 'programming languages,' most people are actually referring to high-level languages. High-level languages are the languages most often used by programmers to write programs. Examples of high-level languages are C++, Fortran, Java and Python. Learning a high-level language is not unlike learning another human language: you need to learn vocabulary and grammar so you can make sentences. To learn a programming language, you need to learn commands, syntax and logic, which correspond closely to vocabulary and grammar.

The code of most high-level languages is portable, so the same source code can run on different hardware without modification (once it is translated for that hardware). Both machine code and assembly languages are hardware-specific, which means that machine code written for one type of computer needs to be modified to run on another. A high-level language cannot be understood directly by a computer, so it needs to be translated into machine code. There are two ways to do this, and they are related to how the program is executed: a high-level language can be compiled or interpreted.

COMPILER VS INTERPRETER

A compiler is a computer program that translates a program written in a high-level language to the machine language of a computer.

The high-level program is referred to as 'the source code.' The compiler is used to translate source code into machine code or compiled code. This does not yet use any of the input data. When the compiled code is executed, referred to as 'running the program,' the program processes the input data to produce the desired output.

An interpreter is a computer program that directly executes instructions written in a programming language, without requiring them to have been compiled into a machine language program beforehand.

HOW TO START WRITING PROGRAMS?

Algorithm:

An algorithm is a step-by-step procedure that defines a set of instructions to be executed in a certain order to get the desired output. Algorithms are generally created independently of any particular programming language, i.e. an algorithm can be implemented in more than one programming language.

Qualities of a good algorithm

1. Input and output should be defined precisely.

2. Each step in the algorithm should be clear and unambiguous.

3. An algorithm shouldn’t include computer code. Instead, it should be written in such a way that it can be used in different programming languages. Good, logical programming is developed through good pre-code planning and organization. This is assisted by the use of pseudocode and program flowcharts.

Flowcharts:

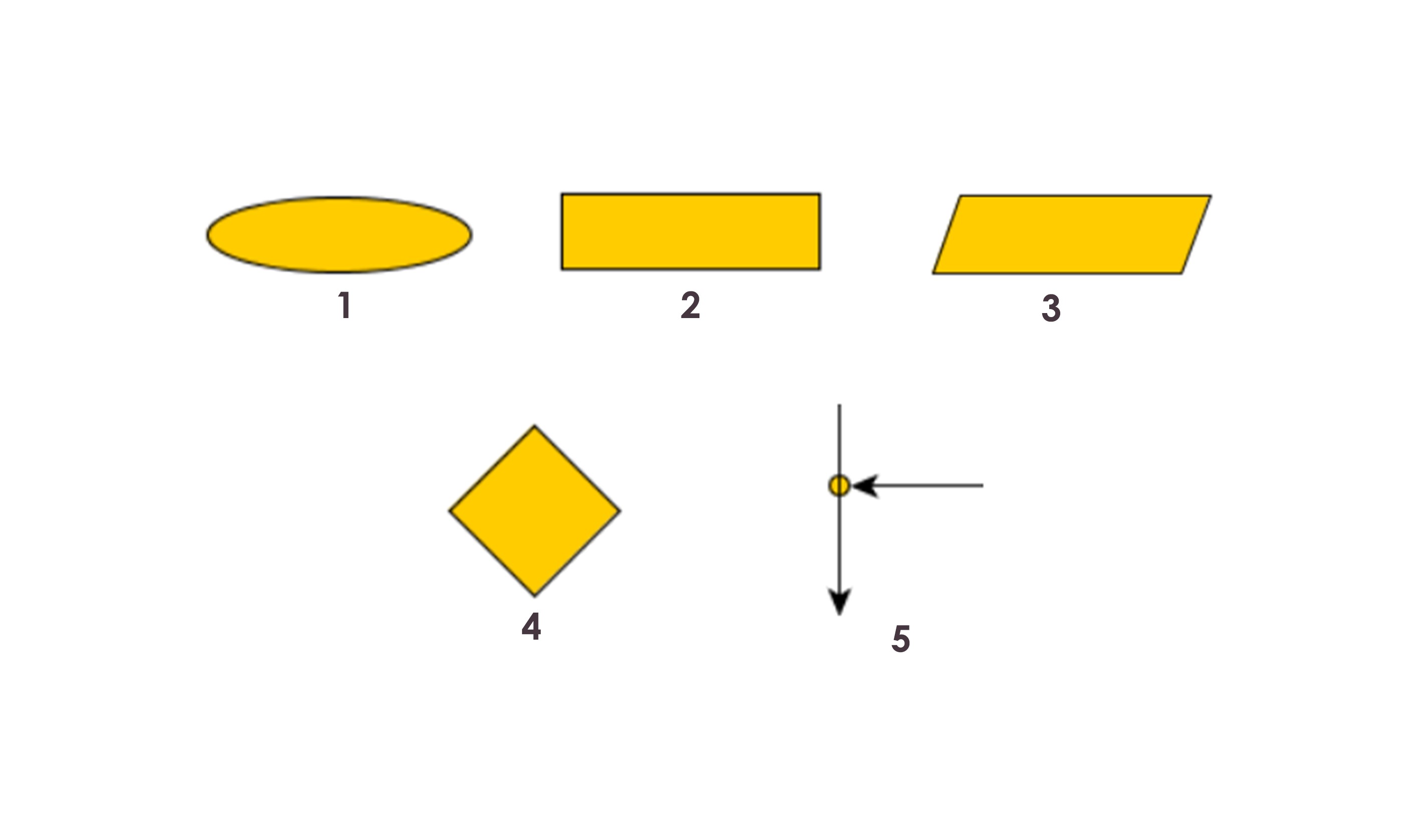

Flowcharts are written with program flow from the top of a page to the bottom. Each command is placed in a box of the appropriate shape, and arrows are used to direct program flow.

1. Terminal (oval): The first symbol is an oval. It may also be drawn as an “ellipse” or a “circle”, but it has the same meaning. This is the first and the last symbol in every flowchart. I like to use ellipses for “begin” and “end”. When I divide an algorithm into several parts, I use small circles for the start/end of each part.

2. Process (rectangle): You will use a rectangle to represent an action (also called a "statement"). More precisely, we use rectangles for assignment statements, i.e. when you change the value of a variable.

3. Input/Output (parallelogram): The parallelogram flowchart symbol is used for input/output (I/O) to/from the program.

4. Decision (rhombus): This is what we use when our program has to make a decision. It is the only block that has more than one exit arrow. The rhombus symbol has one (or several) entrance points and exactly two outputs.

5. Junction: A junction symbol is used to connect two arrows into one.

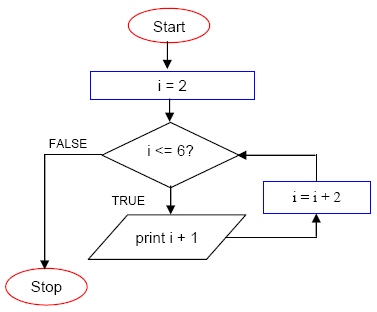

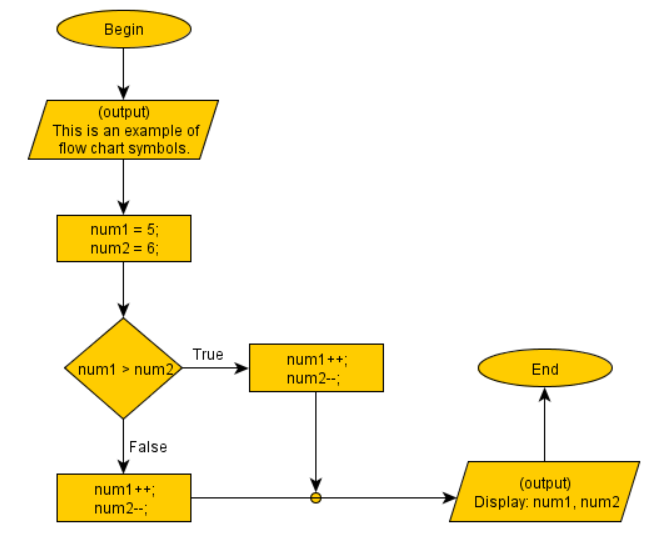

Here is an example of a short algorithm drawn using each of these flowchart symbols.

Datatypes

VARIABLES

A variable is a container (storage area) used to hold data.

Each variable should be given a unique name (identifier).

int a=2;

Here a is the variable name that holds the integer value 2.

The value of a can be changed, hence the name variable.

There are certain rules for naming a variable in C++

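1. Can only contain letters, digits and underscores (_).

2. Cannot begin with a digit.

3. Names are case-sensitive: age and Age are two different variables. A name can start with an uppercase letter, a lowercase letter or an underscore.

4. Cannot be a keyword defined in the C++ language (for example, int is a keyword).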

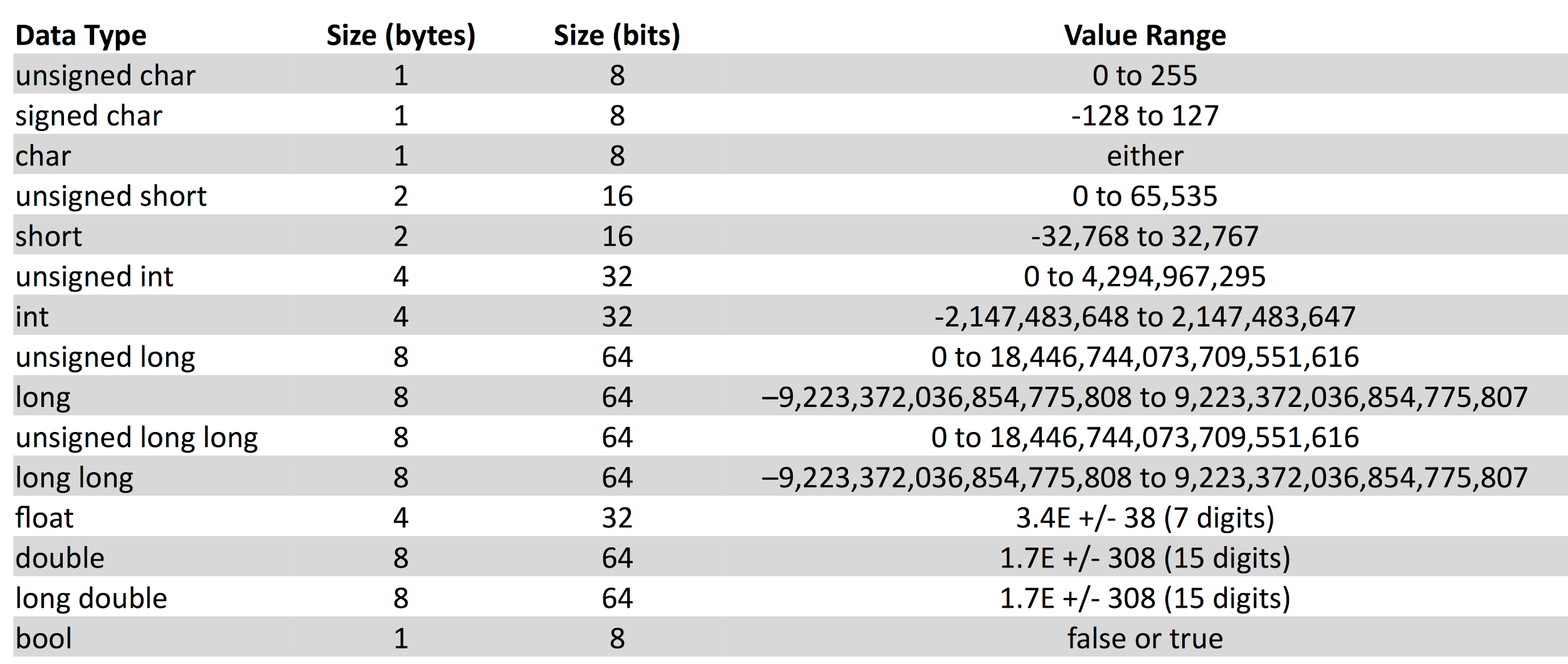

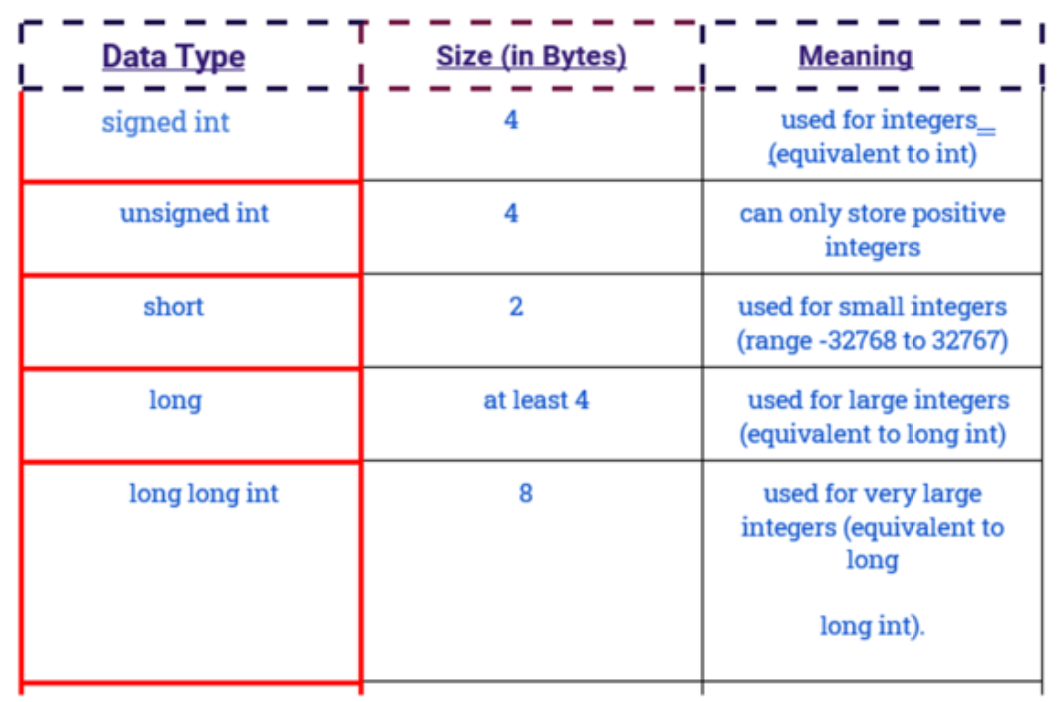

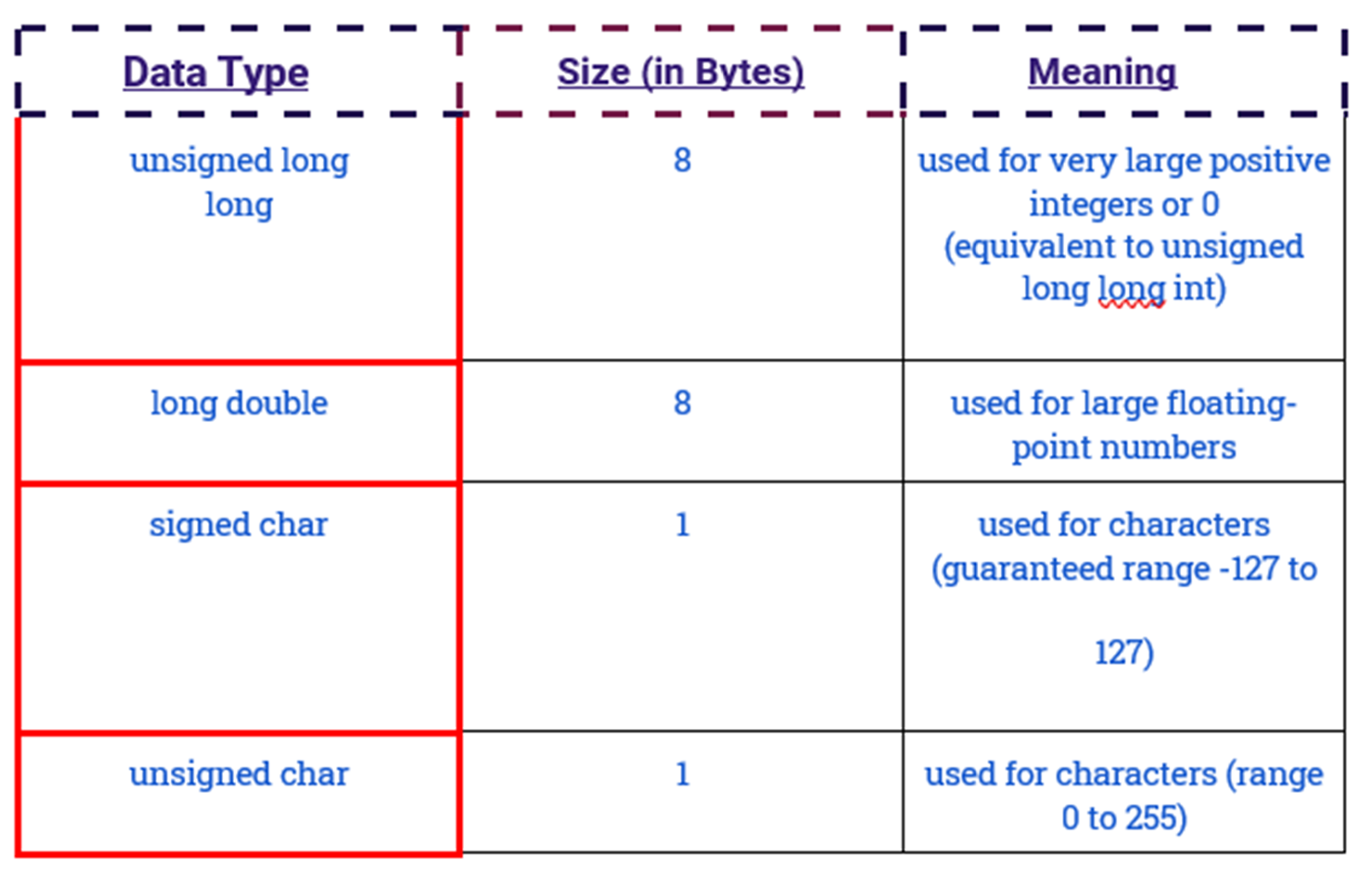

FUNDAMENTAL DATATYPES IN C++ :

Data types are declarations for variables. They determine the type and size of the data associated with a variable, which is essential to know since different data types occupy different amounts of memory.

1. int:

● This data type is used to store integers.

● It usually occupies 4 bytes in memory (the exact size depends on the compiler and platform).

● A 4-byte int can store values from -2147483648 to 2147483647.

Eg. int age = 18;

2. float and double:

● Used to store floating-point numbers (decimals and exponentials)

● Size of float is usually 4 bytes and size of double is usually 8 bytes.

● A float is precise to about 7 significant decimal digits, whereas a double is precise to about 15 decimal digits.

Eg. float pi = 3.14;

double distance = 24E8; // 24 x 10^8

3. char:

● This data type is used to store characters.

● It occupies 1 byte in memory.

● Characters in C++ are enclosed inside single quotes ' '.

● ASCII code is used to store characters in memory.

Eg. char ch = 'a';

4. bool

● This data type has only 2 values: true and false.

● It usually occupies 1 byte in memory.

● True is represented as 1 and false as 0.

Eg. bool flag = false;
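The sizes listed above are the typical values on modern desktop compilers; the C++ standard itself only fixes sizeof(char) as 1 and sets minimum ranges for the other types. A minimal sketch for checking the values on your own machine (print_type_info is just an illustrative name):

```cpp
#include <iostream>
#include <limits>

// Prints the size (in bytes) and some limits of the fundamental types
// on the current compiler/platform. The output varies between platforms.
void print_type_info() {
    std::cout << "int:    " << sizeof(int) << " bytes, range "
              << std::numeric_limits<int>::min() << " to "
              << std::numeric_limits<int>::max() << '\n';
    // digits10 = decimal digits always preserved (typically 6 for float, 15 for double)
    std::cout << "float:  " << sizeof(float) << " bytes, digits10 = "
              << std::numeric_limits<float>::digits10 << '\n';
    std::cout << "double: " << sizeof(double) << " bytes, digits10 = "
              << std::numeric_limits<double>::digits10 << '\n';
    std::cout << "char:   " << sizeof(char) << " byte\n";
    std::cout << "bool:   " << sizeof(bool) << " byte(s)\n";

    char ch = 'a';
    bool flag = true;
    // A char is stored as its character code; converting to int reveals it.
    std::cout << "'a' is stored as " << static_cast<int>(ch) << '\n';
    // true converts to 1 (and false to 0).
    std::cout << "true as int: " << static_cast<int>(flag) << '\n';
}
```

Call print_type_info() from main(); on common 64-bit compilers it reports int as 4 bytes and double as 8 bytes, and 'a' is stored as 97.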

C++ TYPE MODIFIERS

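Type modifiers are used to modify the fundamental data types. The type modifiers in C++ are signed, unsigned, short and long. For example, unsigned int stores only non-negative values, and long long int can store larger integers than int.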

DERIVED DATATYPES:

These are the data types that are derived from the fundamental (or built-in) data types, for example arrays, pointers, functions and references.

USER-DEFINED DATATYPES:

These are the data types that are defined by the user, for example classes, structures, unions and enumerations.
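To make the two categories concrete, here is a small sketch that declares user-defined types (an enumeration and a structure) and uses derived types (an array, a pointer and a reference). The names Color, Student and total_age are hypothetical, chosen only for illustration.

```cpp
#include <string>

// User-defined types
enum class Color { Red, Green, Blue };   // enumeration

struct Student {                          // structure
    std::string name;
    int age;
    Color favorite;
};

// Derived types: `students` is an array parameter (it decays to a pointer),
// and `s` is a reference to each element, so no copies are made.
int total_age(const Student students[], int count) {
    int total = 0;
    for (int i = 0; i < count; i++) {
        const Student& s = students[i];   // reference
        total += s.age;
    }
    return total;
}
```

For example, with an array of two students aged 18 and 20, total_age returns 38, and a pointer to the array's first element can read that student's fields with ->.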

Input/Output

1. Comments

Two slashes (//) start a single-line comment. Comments do not have any effect on the behavior or outcome of the program; they are used to describe the program you’re writing.

2. #include<iostream>

#include is the preprocessor directive that is used to include files in our program. Here we include the iostream standard header, which contains the declarations of the basic standard input/output library in C++ (such as cin and cout).

3. using namespace std;

All elements of the standard C++ library are declared within the std namespace. This line lets us write names such as cout instead of std::cout.

4. int main()

The execution of any C++ program starts with the main function, hence it is necessary to have a main function in your program. ‘int’ is the return type of this function. (We will be studying functions in more detail later.)

5. {}

The curly brackets are used to indicate the starting and ending point of any function (and of other blocks of code). Every opening bracket should have a corresponding closing bracket.

6. cout << "Hello World!\n";

This is a C++ statement. cout represents the standard output stream in C++. It is declared in the iostream standard file within the std namespace. The text between the quotation marks will be printed on the screen. \n will not be printed; it is used to add a line break. Each statement in C++ ends with a semicolon (;).

7. return 0;

return ends the execution of a function. Here the function is main, so when we reach return 0, the program exits. We return an integer because we declared the return type of the main function as int (int main). A return value of zero indicates that everything went fine, and a non-zero value (such as 1) indicates that something has gone wrong.

// Hello world program in C++
#include <iostream>
using namespace std;

int main()
{
    cout << "Hello World!\n";
    return 0;
}

Operators in C++

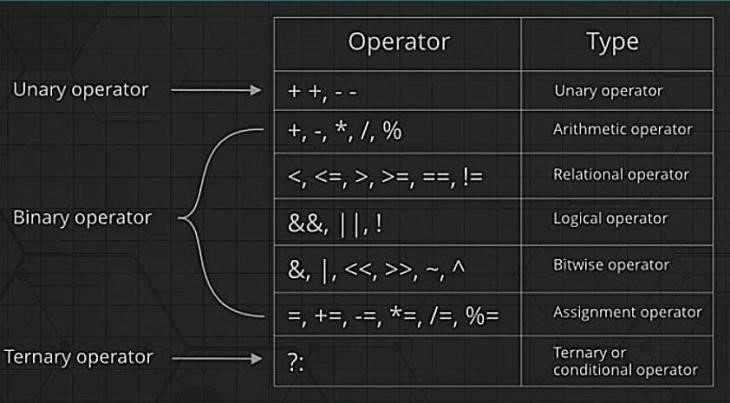

Operators are nothing but symbols that tell the compiler to perform some specific operations. Operators are of the following types -

1. Arithmetic Operators

Arithmetic operators perform some arithmetic operation on one or two operands. Operators that operate on one operand are called unary arithmetic operators and operators that operate on two operands are called binary arithmetic operators. +,-,*,/,% are binary operators. ++, -- are unary operators.

Suppose : A=5 and B=10

| Operator | Operation | Example |
|---|---|---|
| + | Adds two operands | A+B = 15 |
| - | Subtracts right operand from left operand | B-A = 5 |
| * | Multiplies two operands | A*B = 50 |
| / | Divides left operand by right operand | B/A = 2 |
| % | Finds the remainder after integer division | B%A = 0 |

Pre-increment (++a) : Increments the operand first; the expression then evaluates to the new value.

Post-increment (a++) : The expression evaluates to the operand's current (old) value, and the operand is incremented as a side effect.

Pre-decrement (--a) : Decrements the operand first; the expression then evaluates to the new value.

Post-decrement (a--) : The expression evaluates to the operand's current (old) value, and the operand is decremented as a side effect.

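int a = 10;
int b;
b = a++;   // b gets the old value of a (10); a is incremented to 11
cout << a << " " << b << endl;

Output : 11 10

int a = 10;
int b;
b = ++a;   // a is incremented to 11 first, then b gets 11
cout << a << " " << b << endl;

Output : 11 11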

2. Relational Operators

Relational operators define the relation between 2 entities. They give a boolean value as result i.e true or false.

Suppose : A=5 and B=10

| Operator | Operation | Example |
|---|---|---|
| == | Gives true if the two operands are equal | A==B is not true |
| != | Gives true if the two operands are not equal | A!=B is true |
| > | Gives true if left operand is greater than right operand | A>B is not true |
| < | Gives true if left operand is less than right operand | A<B is true |
| >= | Gives true if left operand is greater than or equal to right operand | A>=B is not true |
| <= | Gives true if left operand is less than or equal to right operand | A<=B is true |

Example -

We need to write a program which prints whether a number is more than 10, equal to 10 or less than 10. This can be done using relational operators with if-else statements.

int n;
cin >> n;
if (n < 10) {
    cout << "Less than 10" << endl;
}
else if (n == 10) {
    cout << "Equal to 10" << endl;
}
else {
    cout << "More than 10" << endl;
}

3. Logical Operators

Logical operators are used to connect multiple expressions or conditions together.

We have 3 basic logical operators.

Suppose : A=0 and B=1

&& : AND operator. Gives true if both operands are non-zero. (A && B) is false

|| : OR operator. Gives true if at least one of the two operands is non-zero. (A || B) is true

! : NOT operator. Reverses the logical state of its operand. !A is true

Example -

If we need to check whether a number is divisible by both 2 and 3, we will use AND operator

(num%2==0) && (num%3==0)

If this expression gives true value then that means that num is divisible by both 2 and 3.

(num%2==0) || (num%3==0)

If this expression gives true value then that means that num is divisible by 2 or 3 or both.
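The two expressions above can be wrapped in small functions. This is only a sketch; the function names are hypothetical:

```cpp
// True if num is divisible by both 2 and 3 (logical AND).
bool divisible_by_2_and_3(int num) {
    return (num % 2 == 0) && (num % 3 == 0);
}

// True if num is divisible by 2, by 3, or by both (logical OR).
bool divisible_by_2_or_3(int num) {
    return (num % 2 == 0) || (num % 3 == 0);
}
```

For example, 12 passes both checks, 9 passes only the OR check, and 7 passes neither. Note that && and || stop evaluating as soon as the result is known (short-circuit evaluation).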

4. Bitwise Operators

Bitwise operators are the operators that operate on bits and perform bit-by-bit operations.

Suppose : A=5(0101) and B=6(0110)

& Binary AND. Copies a bit to the result if it exists in both operands.

0101 & 0110 => 0100

| Binary OR. Copies a bit if it exists in either operand.

0101 | 0110 => 0111

^ Binary XOR. Copies the bit if it is set in one operand but not both.

0101^ 0110 => 0011

~ Binary Ones Complement. Flips the bit.

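~0101 => 1010 (looking only at the lowest 4 bits; on a 32-bit int all 32 bits are flipped, so ~5 evaluates to -6)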

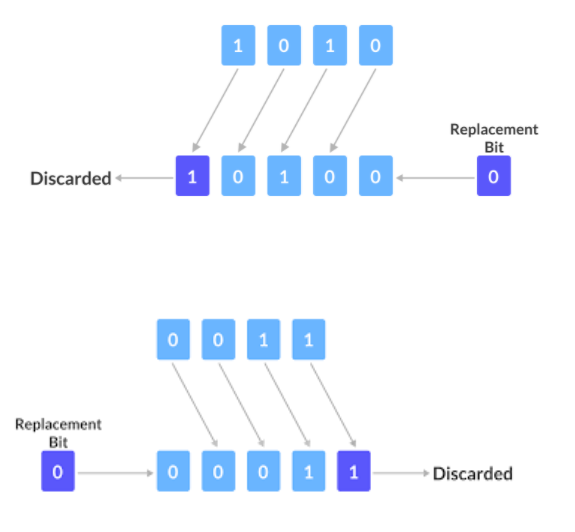

<< Binary Left Shift. Shifts left by the number of places specified by the right operand.

4 (0100)

4<<1=1000 = 8

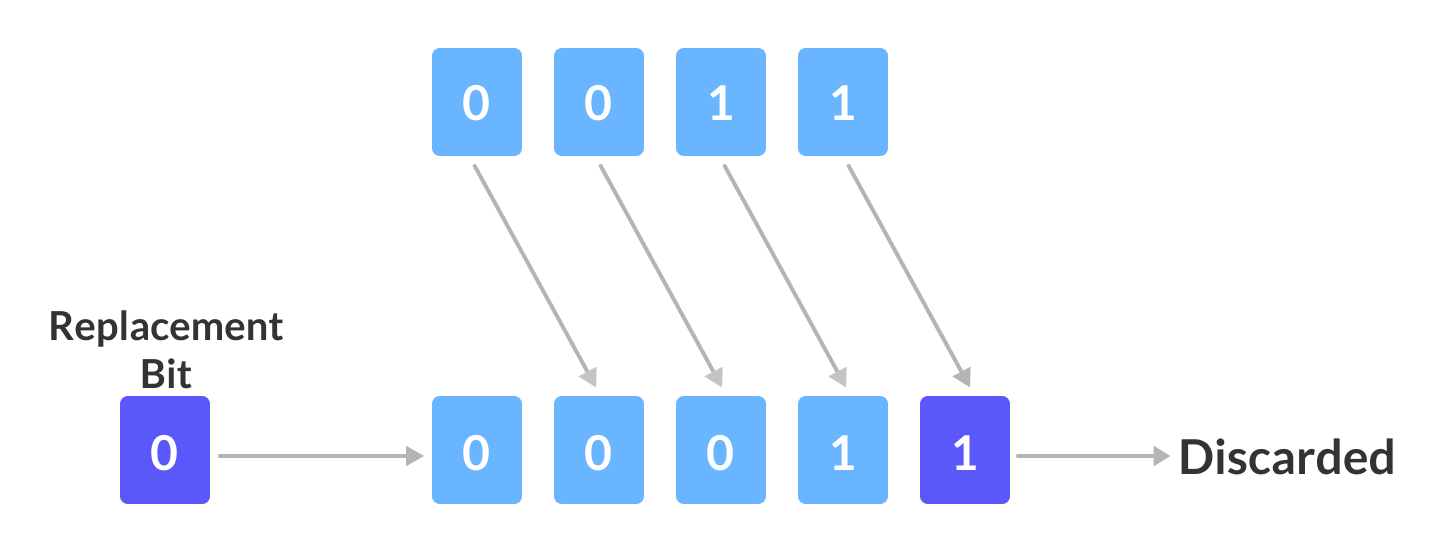

>> Binary Right Shift. Shifts right by the number of places specified by the right operand.

4>>1=0010 = 2

If a shift operator is applied on a number N then,

● N<<a gives the result N*2^a (as long as the result does not overflow)

● N>>a gives the result N/2^a, using integer division (for non-negative N)

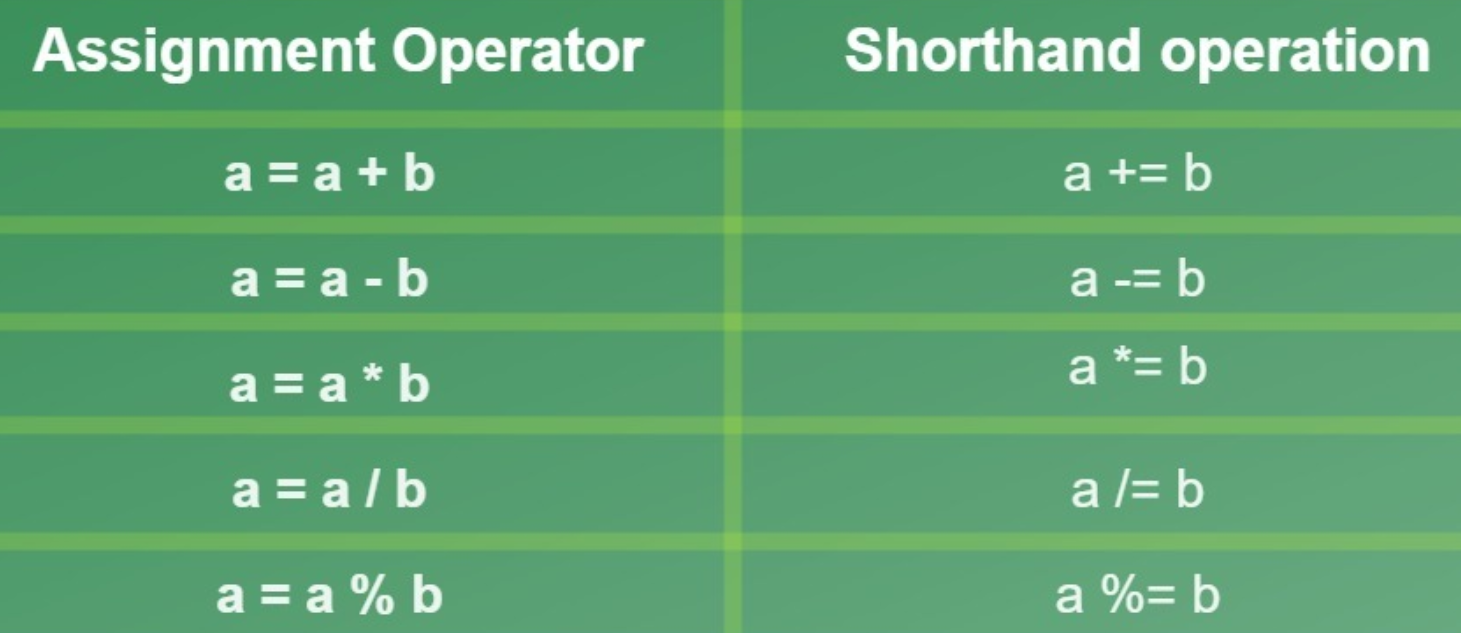
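A short sketch that evaluates the bitwise examples above with A = 5 and B = 6. The helper low_bits_complement is a hypothetical name used to reproduce the 4-bit picture of ~; on a real int, ~ flips every bit.

```cpp
#include <iostream>

// Returns ~x restricted to its lowest `bits` bits, e.g. bits = 4 gives the
// 4-bit view used in the text (~0101 -> 1010). Assumes 0 < bits < 32.
unsigned int low_bits_complement(unsigned int x, int bits) {
    unsigned int mask = (1u << bits) - 1;   // bits = 4 -> 0b1111
    return ~x & mask;
}

void bitwise_demo() {
    int A = 5, B = 6;                              // 0101 and 0110
    std::cout << "A & B  = " << (A & B) << '\n';   // 0100 -> 4
    std::cout << "A | B  = " << (A | B) << '\n';   // 0111 -> 7
    std::cout << "A ^ B  = " << (A ^ B) << '\n';   // 0011 -> 3
    std::cout << "~A     = " << (~A) << '\n';      // all bits flipped -> -6 (two's complement)
    std::cout << "4 << 1 = " << (4 << 1) << '\n';  // 1000 -> 8 (4 * 2^1)
    std::cout << "4 >> 1 = " << (4 >> 1) << '\n';  // 0010 -> 2 (4 / 2^1)
}
```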

5. Assignment Operators

= Assigns value of right operand to left operand A=B will put value of B in A

+= Adds right operand to the left operand and assigns the result to left operand.

A+=B means A = A+B

-= Subtracts right operand from the left operand and assigns the result to left operand.

A-=B means A=A-B

*= Multiplies right operand with the left operand and assigns the result to left operand.

A*=B means A=A*B

/= Divides left operand by the right operand and assigns the result to left operand.

A/=B means A=A/B

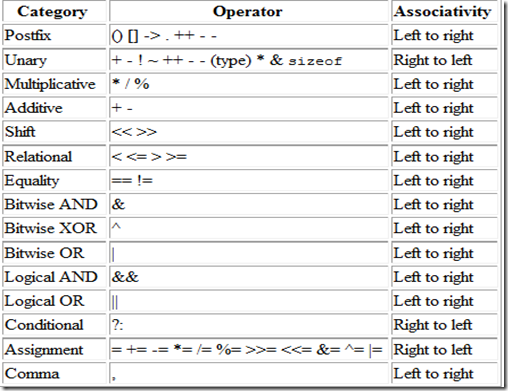

⦿ PRECEDENCE OF OPERATORS

Decision Making

IF-ELSE

The if block is used to specify the code to be executed if the condition specified in it is true; the else block is executed otherwise.

#include <iostream>
using namespace std;

int main() {
    int age;
    cin >> age;
    if (age >= 18) {
        cout << "You can vote.";
    }
    else {
        cout << "Not eligible for voting.";
    }
    return 0;
}

ELSE-IF

To specify multiple if conditions, we first use if and then the consecutive statements use else if.

#include <iostream>
using namespace std;

int main() {
    int x, y;
    cin >> x >> y;
    if (x == y) {
        cout << "Both the numbers are equal";
    }
    else if (x > y) {
        cout << "X is greater than Y";
    }
    else {
        cout << "Y is greater than X";
    }
    return 0;
}

NESTED-IF

To specify conditions within conditions, we make use of nested ifs.

#include <iostream>
using namespace std;

int main() {
    int x, y;
    cin >> x >> y;
    if (x == y) {
        cout << "Both the numbers are equal";
    }
    else {
        if (x > y) {
            cout << "X is greater than Y";
        }
        else {
            cout << "Y is greater than X";
        }
    }
    return 0;
}

Loops in C++

1. for loop:

The syntax of the for loop is

for (initialization; condition; update) {
    // body of the loop
}

#include <iostream>
using namespace std;

int main() {
    for (int i = 1; i <= 5; i++) {
        cout << i << " ";
    }
    return 0;
}

In this example, i is initialized to 1 and the test condition is i<=5, i.e. the loop is executed as long as the value of i is less than or equal to 5. In each iteration the value of i is incremented by one by doing i++, so the program prints 1 2 3 4 5.

2. while loop

The syntax for the while loop is

while (condition) {
    // body of the loop
}

#include <iostream>
using namespace std;

int main() {
    int i = 1;
    while (i <= 5) {
        cout << i << " ";
        i++;
    }
    return 0;
}

In this example, i is initialized to 1 before the loop and the test condition is i<=5, i.e. the loop is executed as long as the value of i is less than or equal to 5. In each iteration the value of i is incremented by one by doing i++.

3. do-while loop

The syntax for the do-while loop is

do {
    // body of the loop
} while (condition);

#include <iostream>
using namespace std;

int main() {
    int i = 1;
    do {
        cout << i << " ";
        i++;
    } while (i <= 5);
    return 0;
}

In this example, i is initialized to 1. In each iteration the value of i is printed and incremented by one by doing i++, and then the test condition i<=5 is checked, i.e. the loop is executed as long as the value of i is less than or equal to 5. Since the test condition is checked only after the loop body has run, a do-while loop always runs at least once.

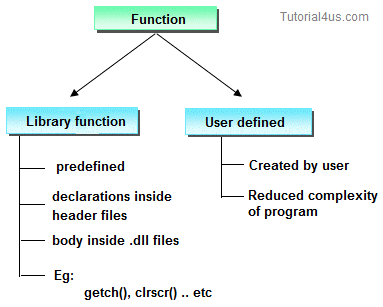

Functions

Why are functions used?

● If some functionality is performed at multiple places in software, then rather than writing the same code again and again, we create a function and call it everywhere. This helps reduce code redundancy.

● Functions make maintenance of code easy, as we only have to change one place if we make future changes to the functionality.

● Functions make the code more readable and easier to understand.

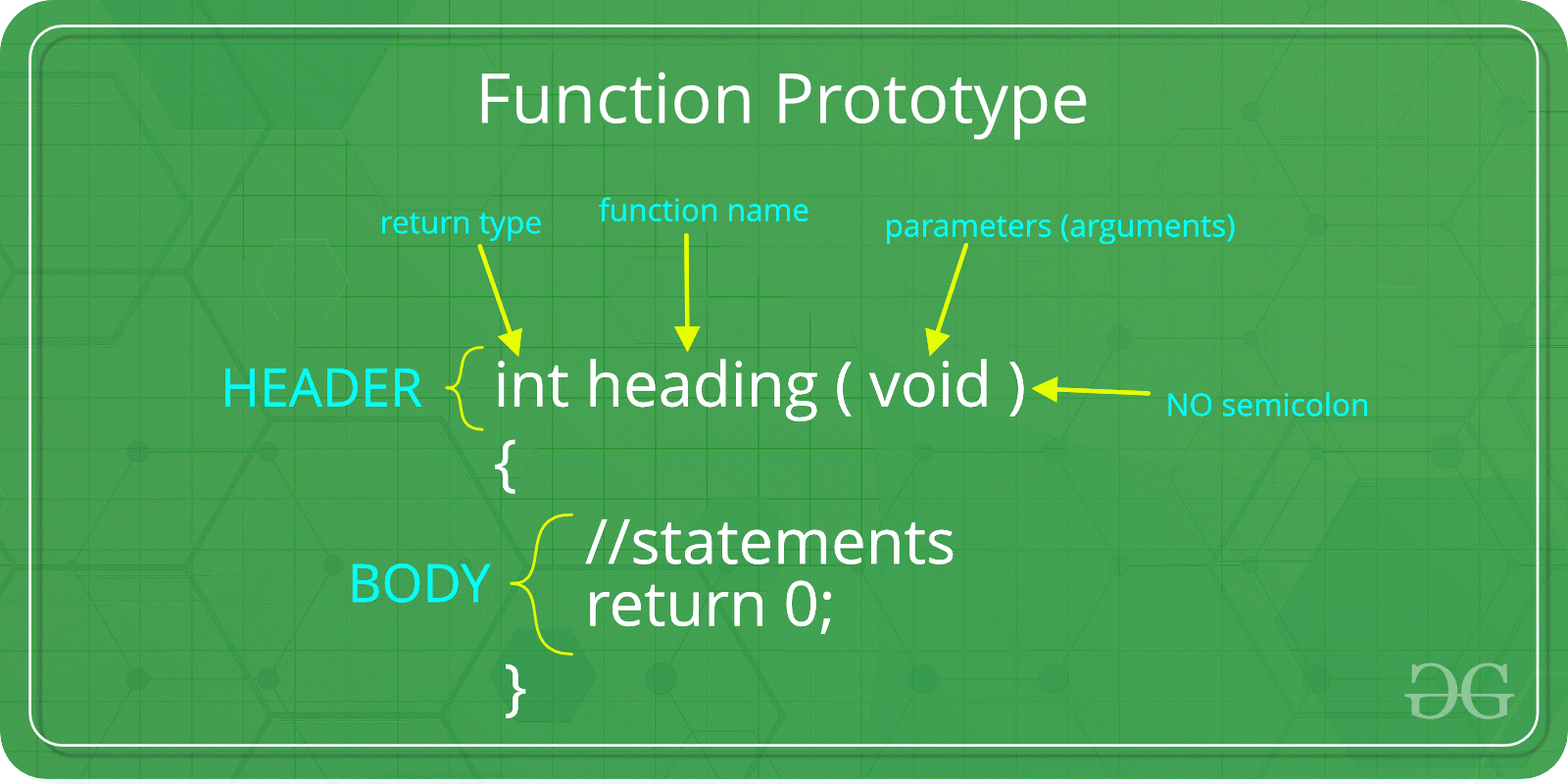

The syntax for defining a function is:

return-type function_name (parameter 1, parameter 2, ..., parameter n) {
    // function_body
}

return-type

The return type of a function is the data type of the value that the function returns.

For eg. if we write a function that adds 2 integers and returns their sum, then the return type of this function will be ‘int’, as we will be returning the sum, which is an integer value.

When a function does not return any value, in that case the return type of the function is ‘void’.

function_name

It is the unique name of that function. A function must be declared before it is called, either by defining it earlier in the file or by writing a separate declaration (prototype) first.

Parameters

A function can take some parameters as inputs. These parameters are specified along with their datatypes.

For eg. if we are writing a function to add 2 integers, the parameters would be passed like – int add (int num1, int num2)

Main function:

The main function is a special function as the computer starts running the code from the beginning of the main function. Main function serves as the entry point for the program.
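Putting the pieces together, here is a minimal sketch of the add function mentioned above, plus a void function that calls it. The name print_sum is hypothetical. The declaration (prototype) at the top lets print_sum call add before add is defined.

```cpp
#include <iostream>

// Declaration (prototype): return type, name and parameter types.
int add(int num1, int num2);

// A function with return type void: it performs an action and returns nothing.
void print_sum(int num1, int num2) {
    std::cout << num1 << " + " << num2 << " = " << add(num1, num2) << '\n';
}

// Definition: returns the sum of its two int parameters.
int add(int num1, int num2) {
    return num1 + num2;
}
```

Calling print_sum(2, 3) from main() prints 2 + 3 = 5.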

Mathematics

Modular Arithmetic

MODULAR ARITHMETIC

You must have stumbled across problems where you see a large number like 10^9+7 and you tend to skip these ones. After reading this section, handling these would become a cakewalk for you. Let’s start with some basics of modular arithmetic.

INTRODUCTION

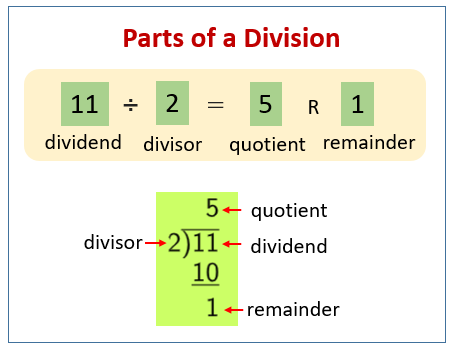

When we divide two integers, we get a quotient Q and a remainder R. To get remainder in programming, we use the modulo operator(%).

Examples:

A % M = R, for example:

11 % 2 = 1

13 % 7 = 6

21 % 3 = 0

PROPERTIES

Here are some rules to be followed for performing Modular calculations:

Addition:

(a +b) % m = (a % m + b % m) % m

(6 +8) % 5 = (6 % 5 + 8 % 5) % 5

= (1 + 3) % 5

= 4 % 5

= 4

__________________________________

Subtraction:

(a - b) % m =(a % m - b % m + m) % m

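Here, m is added so that the result is never negative: in C++, the % operator returns a negative value when its left operand is negative (for example, (1 - 3) % 5 is -2).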

(6 - 8) % 5 = (6 % 5 - 8 % 5 +5) % 5

= (1 -3 + 5) % 5 = 3 % 5 = 3

__________________________________

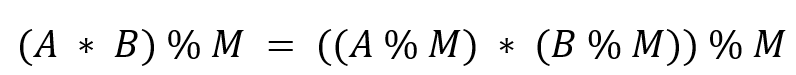

Multiplication:

(a * b) % m = ((a % m) * (b % m)) % m

(6 * 8) % 5 = ((6 % 5) * (8 % 5)) % 5

= (1 * 3) % 5

= 3 % 5

= 3
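The three rules above translate directly into helper functions. This is a sketch under the assumptions that m > 0, the inputs are non-negative, and m is small enough (about 3 * 10^9 or less) that the product of two remainders fits in a long long; the function names are hypothetical.

```cpp
// Modular helpers that follow the addition, subtraction and multiplication rules.
// Assumptions: m > 0, a >= 0, b >= 0, and m small enough (about 3 * 10^9 or less)
// that (m - 1) * (m - 1) fits in a long long.
long long mod_add(long long a, long long b, long long m) {
    return (a % m + b % m) % m;
}

long long mod_sub(long long a, long long b, long long m) {
    // Adding m keeps the result non-negative; in C++ (6 - 8) % 5 is -2, not 3.
    return (a % m - b % m + m) % m;
}

long long mod_mul(long long a, long long b, long long m) {
    // Reducing both operands first keeps the product below m * m.
    return ((a % m) * (b % m)) % m;
}
```

With the numbers from the examples, mod_add(6, 8, 5), mod_sub(6, 8, 5) and mod_mul(6, 8, 5) return 4, 3 and 3. Reducing each operand first keeps every intermediate product below m^2, which fits in a long long for m = 10^9 + 7.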

__________________________________

Division:

Modular division is totally different from modular addition, subtraction and multiplication, and it does not always exist.

(a / b) % m is not equal to ((a % m) / (b % m)) % m

Instead, when b divides a exactly and b has an inverse modulo m:

(a / b) % m = ((a % m) * (b^(-1) % m)) % m

Note: b^(-1) is the modular multiplicative inverse of b modulo m.

__________________________________

Modular multiplicative inverse:

What is modular multiplicative inverse? If you have two numbers A and M, you are required to find B such that it satisfies the following equation:

(A * B) % M = 1

Here B is the modular multiplicative inverse of A under modulo M.

Formally, if you have two integers A and M, B is said to be modular multiplicative inverse of A under modulo M if it satisfies the following equation:


A * B ≡ 1 (mod M), where B is in the range [1, M-1]

Why is B in the range [1,M-1]?

Since B is taken modulo M, the inverse must be in the range [0, M-1]. However, B = 0 is invalid because A * 0 = 0, so the inverse must be in the range [1, M-1]. An inverse exists only when A and M are coprime, i.e. GCD(A, M) = 1.

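Approach 1 (basic approach)

// Tries every candidate B from 1 to M-1.
// Returns -1 if no inverse exists (i.e. when GCD(A, M) != 1).
int modInverse(int A, int M)
{
    A = A % M;
    for (int B = 1; B < M; B++)
        if ((1LL * A * B) % M == 1)   // 1LL avoids int overflow in A * B
            return B;
    return -1;
}

Time Complexity :

The algorithm mentioned above runs in O(M).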

int modInverse(int A,int M)

{

return modularExponentiation(A,M-2,M);

}

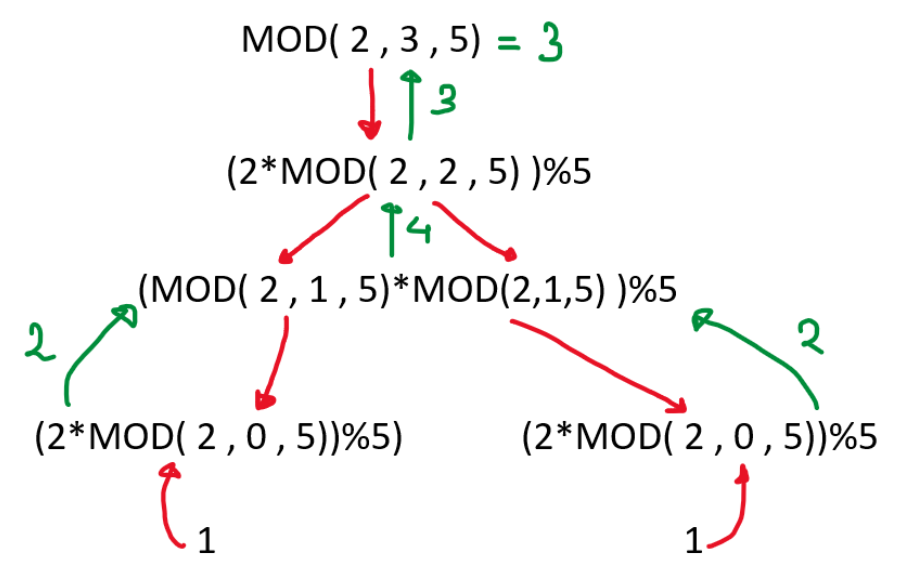

Approach 2 (used only when M is prime)

This approach uses Fermat's Little Theorem.

The theorem specifies the following: ((A)^(M-1)) % M =1

We can divide both sides by A to get : (A^(M-2)) % M = A^(-1)

Yes, we just need A^(M-2). It can be easily calculated by modular exponentiation:

Time complexity:

O(log M)
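The modularExponentiation() helper called above is not defined in this section. One possible definition, sketched here under the assumption that base >= 0, mod >= 1 and mod is below about 3 * 10^9, is binary exponentiation (square-and-multiply), which gives the O(log M) running time. modInverseFermat is a hypothetical name for the Fermat-based inverse, kept separate from the modInverse functions above.

```cpp
// Binary exponentiation (square-and-multiply): computes (base^exp) % mod
// using O(log exp) multiplications.
// Assumptions: base >= 0, exp >= 0, mod >= 1, and mod small enough
// (about 3 * 10^9 or less) that (mod - 1) * (mod - 1) fits in a long long.
long long modularExponentiation(long long base, long long exp, long long mod) {
    long long result = 1 % mod;        // 1 % mod handles mod == 1
    base %= mod;
    while (exp > 0) {
        if (exp & 1)                   // current lowest bit of exp is set
            result = result * base % mod;
        base = base * base % mod;      // base^(2^k) for the next bit
        exp >>= 1;
    }
    return result;
}

// Fermat-based inverse: valid only when M is prime and A is not a multiple of M.
long long modInverseFermat(long long A, long long M) {
    return modularExponentiation(A, M - 2, M);
}
```

For example, modInverseFermat(3, 7) returns 5, and indeed 3 * 5 = 15 ≡ 1 (mod 7).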


Inclusion-Exclusion

According to the inclusion-exclusion principle, to count the unique ways of doing a task, we add the number of ways to do it in one way (n1) and the number of ways to do it in some other way (n2), and then subtract the number of ways that are common to both sets.

For two finite sets A1 and A2

|A1 ∪ A2| = |A1| + |A2| - |A1 ∩ A2|

Have a look at these examples:

Example 1 :

How many numbers between 10 and 100, including both, have the first digit as 3 or the last digit as 5?

Solution :

The numbers with 1st digit as 3 are 30, 31, 32, ..., 39. So, the number of numbers with 1st digit as 3 is equal to 10. The numbers with last digit as 5 are 15, 25, 35, ..., 95. Hence, the number of numbers with last digit as 5 is equal to 9.

So, is 10 + 9 = 19 the answer? No.

Because we haven’t subtracted the common part between the two sets. We need to find the numbers that have both the first digit as 3 and the last digit as 5. Here, there’s only 1 such number, i.e. 35.

Therefore, the final answer = 10 + 9 - 1 = 18.

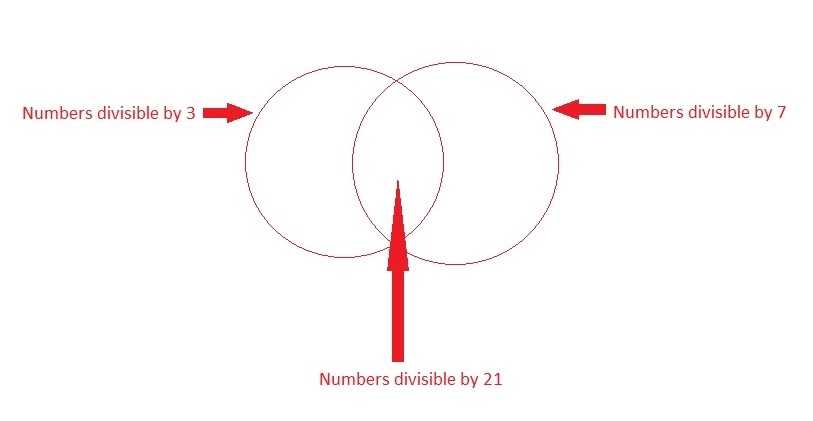

Example 2 :

How many numbers between 1 and 100, including both, are divisible by 3 or 7?

Solution :

Number of numbers divisible by 3 = ⌊100 / 3⌋ = 33.

Number of numbers divisible by 7 = ⌊100 / 7⌋ = 14.

Number of numbers divisible by both 3 and 7 (since 3 and 7 are coprime, these are the multiples of 21) = ⌊100 / (3 * 7)⌋ = ⌊100 / 21⌋ = 4.

Hence, number of numbers divisible by 3 or 7 = 33 + 14 - 4 = 43.
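A quick way to convince yourself of the answer is to compare the formula with a brute-force count. A minimal sketch (hypothetical function names), assuming a and b are coprime so that "divisible by both" means "divisible by a * b", as is the case for 3 and 7:

```cpp
// Inclusion-exclusion: numbers in [1, n] divisible by a or b.
// Assumes a and b are coprime, so "divisible by both" = "divisible by a * b".
int count_divisible_either(int n, int a, int b) {
    return n / a + n / b - n / (a * b);
}

// Brute force over every number in [1, n], for comparison.
int count_divisible_either_brute(int n, int a, int b) {
    int count = 0;
    for (int i = 1; i <= n; i++)
        if (i % a == 0 || i % b == 0)
            count++;
    return count;
}
```

Both functions return 43 for n = 100, a = 3, b = 7.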

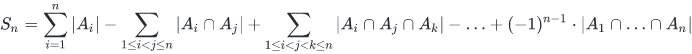

If there are more than two sets, the following general formula is applicable:

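For n finite sets A_1, A_2, ..., A_n, the number of elements in their union is:

$$\left|\bigcup_{i=1}^{n} A_i\right| = \sum_{i}|A_i| - \sum_{i<j}|A_i \cap A_j| + \sum_{i<j<k}|A_i \cap A_j \cap A_k| - \cdots + (-1)^{n-1}\,|A_1 \cap A_2 \cap \cdots \cap A_n|$$

Here, we can observe that intersections of an odd number of sets are added, while intersections of an even number of sets are subtracted.

Actually, each non-empty subset of {A_1, ..., A_n} is considered exactly once in the formula, so the formula for n sets has 2^n - 1 terms.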

Problem :

Given two positive integers n and r, count the number of integers in the interval [1,r] that are relatively prime to n (their GCD with n is 1).

Solution:

Let’s first find the number of integers between 1 and r that are not relatively prime to n (i.e. they share at least one prime factor with n).

So, the first step is to find the prime factors of n. Let the prime factors of n be p_i(i = 1...k )

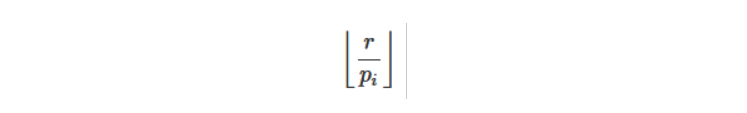

How many numbers in the interval [1,r] are divisible by p_i? The answer is ⌊r / p_i⌋.

However, if we simply sum these numbers, some numbers will be counted several times (those that have several p_i as their factors). Therefore, it is necessary to use the inclusion-exclusion principle.

We will iterate over all non-empty subsets of the p_i (there are 2^k - 1 of them), calculate their product, and add or subtract the number of multiples of their product.

Below is the C++ implementation of the above idea:

// CPP program to count the number of integers in range
// 1-r that are relatively prime to n
#include <iostream>
#include <vector>
using namespace std;

// function to count the number of integers in range
// 1-r that are relatively prime to n
int count(int n, int r) {
    vector<int> p; // vector to store prime factors of n

    // prime factorisation of n
    for (int i = 2; i * i <= n; ++i)
        if (n % i == 0) {
            p.push_back(i);
            while (n % i == 0)
                n /= i;
        }
    if (n > 1)
        p.push_back(n);

    // sum = number of integers in [1, r] sharing a prime factor with n
    int sum = 0;
    for (int mask = 1; mask < (1 << p.size()); ++mask) {
        int mult = 1,
            bits = 0;
        for (int i = 0; i < (int)p.size(); ++i)
            // Check if ith bit is set in mask
            if (mask & (1 << i)) {
                ++bits;
                mult *= p[i];
            }
        int cur = r / mult;
        if (bits % 2 == 1)
            sum += cur; // if odd number of prime factors are selected
        else
            sum -= cur; // if even number of prime factors are selected
    }
    return r - sum;
}

// Driver Code
int main()
{
    int n = 30;
    int r = 30;
    cout << count(n, r);
    return 0;
}

//OUTPUT : 8


Combinatorics

PERMUTATIONS :

● Permutation: It is the different arrangements of a given number of elements taken one by one, or some, or all at a time.

● For example, if we have three elements A, B and C, then there are 6 possible permutations: ABC, ACB, BAC, BCA, CAB, CBA.

The number of ways of permuting r items chosen from a collection of n items is represented in mathematics by nPr. The mathematical formula for the same is:

nPr = n! / (n − r)!

Permutations with repetitions :

If we have n objects, of which p1 are of one kind, p2 are of another kind, p3 are of a third kind and the rest are all different, then the number of distinct permutations is:

Permutations = n! / (p1! * p2! * p3! * …)

Circular Permutations :

Circular permutation is the total number of ways in which n distinct objects can be arranged around a fixed circle. It is of two types:

Clockwise and Anticlockwise orders are different:

Permutations = (n−1)!

Clockwise and Anticlockwise orders are same.

Permutations = (n−1)!/2!
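The permutation formulas above can be sketched as follows. This assumes small inputs: factorial() is exact only up to 20! in an unsigned 64-bit integer, and the function names are hypothetical.

```cpp
#include <vector>

// n! as a 64-bit unsigned integer; exact only for n <= 20.
unsigned long long factorial(int n) {
    unsigned long long f = 1;
    for (int i = 2; i <= n; i++)
        f *= i;
    return f;
}

// nPr = n! / (n - r)!, computed as n * (n - 1) * ... * (n - r + 1).
// Assumes 0 <= r <= n.
unsigned long long nPr(int n, int r) {
    unsigned long long result = 1;
    for (int i = 0; i < r; i++)
        result *= (n - i);
    return result;
}

// n! / (p1! * p2! * ...), where counts holds p1, p2, ... (identical objects).
unsigned long long multiset_permutations(int n, const std::vector<int>& counts) {
    unsigned long long result = factorial(n);
    for (int c : counts)
        result /= factorial(c);   // each partial quotient is still an integer
    return result;
}

// Circular arrangements of n distinct objects: (n - 1)!, or (n - 1)! / 2 when
// clockwise and anticlockwise orders are the same (meaningful for n >= 3).
unsigned long long circular_permutations(int n, bool direction_matters) {
    unsigned long long ways = factorial(n - 1);
    return direction_matters ? ways : ways / 2;
}
```

For example, nPr(3, 3) gives the 6 arrangements of A, B and C, and multiset_permutations(11, {4, 4, 2}) counts the distinct rearrangements of MISSISSIPPI (4 I's, 4 S's, 2 P's).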

COMBINATIONS :

● Combination: It is the different selections of a given number of elements taken one by one, or some, or all at a time.

● For example, if we have two elements A and B, then there is only one way to select two items: we select both of them.

The number of ways of selecting r items from a collection of n items is represented in mathematics by nCr. The mathematical formula for the same is:

nCr = n! / (r! * (n − r)!)

Remember that in a combination the order of the chosen items does not matter at all. It is only about which distinct items we choose from the given set (for now, we assume there is no repetition among the n items).

Here are some useful formulas to use in combination:

● nCr = nC(n−r)

● nCn = nC0 = 1
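One common way to compute nCr without evaluating large factorials is the multiplicative formula, which also uses the identity nCr = nC(n−r) to shorten the loop. A sketch, assuming n >= 0 and results small enough for unsigned long long (the intermediate product can overflow for large n); nCr is a hypothetical function name:

```cpp
// nCr with the multiplicative formula. After step i, result equals
// (n - r + i) C i, so each division by i is exact.
// Assumes n >= 0; the product result * (n - r + i) can overflow for large n.
unsigned long long nCr(int n, int r) {
    if (r < 0 || r > n)
        return 0;
    if (r > n - r)
        r = n - r;                       // nCr = nC(n-r): use the shorter loop
    unsigned long long result = 1;
    for (int i = 1; i <= r; i++)
        result = result * (n - r + i) / i;
    return result;
}
```

For example, nCr(2, 2) is 1 (select both A and B), and nCr(6, 4) equals nCr(6, 2), which is 15.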


Probability

As we all know by now, probability is a measure of how likely something is to happen.

Let’s denote an event by A, for which we need to find the probability of how likely the event is going to happen. We denote this by using P(A).

And mathematically, when all outcomes are equally likely, P(A) = Number of outcomes where A happens / Total number of outcomes.

Basic probability calculations

Complement of A : Complement of an event A means not A. Probability of complement event of A means the probability of all the outcomes in sample space other than the ones in A.

P(A^c) = 1 − P(A).

Union and Intersection : The probability of intersection of two events A and B is P(A∩B). When event A occurs in union with event B then the probability together is defined as P(A∪B) = P(A) + P(B) − P(A∩B) which is also known as the addition rule of probability.

Mutually exclusive : Any two events are mutually exclusive when they have non-overlapping outcomes i.e. if A and B are two mutually exclusive events then, P(A∩B) = 0. From the addition rule of probability

P(A∪B) = P(A) + P(B)

as A and B are disjoint or mutually exclusive events.

Independent : Any two events are independent of each other if one has zero effect on the other i.e. the occurrence of one event does not affect the occurrence of the other. If A and B are two independent events then, P(A∩B) = P(A) ∗ P(B).
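The addition rule and the independence test can be checked by brute force on a small sample space. A sketch using two fair dice and two illustrative events chosen here (not from the text): A = "the first die shows 6" and B = "the two dice sum to 7". Counting outcomes with integers avoids floating-point comparisons; DiceCounts and count_two_dice_events are hypothetical names.

```cpp
// Counts, over the 36 equally likely outcomes of two fair dice, how many
// outcomes fall in A, in B, in A ∩ B and in A ∪ B.
struct DiceCounts {
    int a = 0, b = 0, both = 0, either = 0;
};

DiceCounts count_two_dice_events() {
    DiceCounts c;
    for (int d1 = 1; d1 <= 6; d1++) {
        for (int d2 = 1; d2 <= 6; d2++) {
            bool inA = (d1 == 6);          // A: first die shows 6
            bool inB = (d1 + d2 == 7);     // B: sum is 7
            if (inA) c.a++;
            if (inB) c.b++;
            if (inA && inB) c.both++;
            if (inA || inB) c.either++;
        }
    }
    return c;
}
```

Dividing each count by 36 gives P(A) = 6/36, P(B) = 6/36, P(A∩B) = 1/36 and P(A∪B) = 11/36 = 6/36 + 6/36 − 1/36, matching the addition rule. Since P(A) * P(B) = 1/36 = P(A∩B), these two events also happen to be independent.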

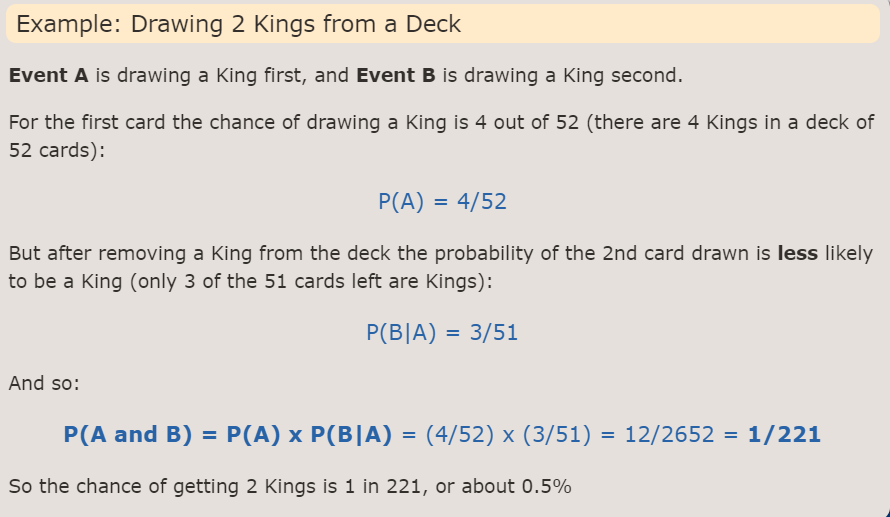

Conditional Probability

The conditional probability of an event B is the probability that the event will occur given the knowledge that an event A has already occurred. This probability is written P(B|A), notation for the probability of B given A. In the case where events A and B are independent (where event A has no effect on the probability of event B), the conditional probability of event B given event A is simply the probability of event B, that is P(B).

If events A and B are not independent, then the probability of the intersection of A and B (the probability that both events occur) is defined by P(A and B) = P(A)P(B|A).

From this definition, the conditional probability P(B|A) is easily obtained by dividing by P(A) (provided P(A) > 0):

P(B|A) = P(A∩B) / P(A)

Binomial Distribution

To get started first we need to learn about something called Bernoulli Trial. Bernoulli Trial is a random experiment with only two possible, mutually exclusive outcomes. And each Bernoulli Trial is independent of the other.

To better understand stuff, let’s see an example.

Suppose we toss a fair coin three times, and we need to find the probability of getting exactly two heads.

Now, we can see that the probability of getting heads is ½, and tails is also ½, for a fair coin. And here all the tosses of the coin are independent of each other. So, we may say that a single toss of the coin is a Bernoulli Trial, with the two possible outcomes of heads and tails. Let p denote the probability of getting heads in a fair coin toss, and q the probability of getting tails. We can see that p = ½ and q = ½.

The coin is tossed three times, so we have to select which two of the three tosses show heads. The number of ways to select them is 3C2. So we can denote the total probability as 3C2 * p^2 * q = 3 * (1/2)^2 * (1/2) = 3/8.

Now let’s generalise the approach to problems like these involving Bernoulli Trials.

We saw that the Bernoulli Trial involves two mutually exclusive outcomes. Now for convenience they are often labelled as, “success” and “failure”. The binomial distribution is used to obtain the probability of observing x successes in N trials, with the probability of success on a single trial denoted by p. The binomial distribution assumes that p is fixed for all trials.
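In formula form, the probability of exactly x successes in N trials is NCx * p^x * (1 − p)^(N − x), which generalises the 3C2 * p^2 * q computation for the coin. A sketch, assuming 0 <= x <= N and 0 <= p <= 1; binomial_probability is a hypothetical name:

```cpp
#include <cmath>

// Probability of exactly x successes in N independent Bernoulli trials,
// each with success probability p: NCx * p^x * (1 - p)^(N - x).
// Assumes 0 <= x <= N and 0 <= p <= 1.
double binomial_probability(int N, int x, double p) {
    double ways = 1.0;                   // NCx via the multiplicative formula
    for (int i = 1; i <= x; i++)
        ways = ways * (N - x + i) / i;
    return ways * std::pow(p, x) * std::pow(1.0 - p, N - x);
}
```

binomial_probability(3, 2, 0.5) gives 0.375, i.e. the 3/8 found for the coin example, and the probabilities for x = 0, 1, ..., N add up to 1.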


LEVEL-1: INITIATED

Number Theory

GCD

GCD(Greatest Common Divisor) or HCF(Highest Common Factor) of 2 integers a and b refers to the greatest possible integer which divides both a and b.

GCD plays a very important part in solving several types of problems(both easy and difficult) which makes it a very important topic for Competitive Programming.

Algorithms to find GCD:

Brute Force Approach:

A simple approach is to start from the smaller number and iterate down to 1. As soon as we find a number that divides both a and b, we can return it as the result.

Time complexity: O(min(a,b))

Space complexity: O(1)
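A minimal sketch of the brute-force approach, assuming both numbers are positive (gcd_brute is a hypothetical name):

```cpp
#include <algorithm>

// Brute-force GCD: tries every candidate d from min(a, b) down to 1 and
// returns the first one that divides both numbers. Assumes a > 0 and b > 0.
int gcd_brute(int a, int b) {
    for (int d = std::min(a, b); d >= 1; d--) {
        if (a % d == 0 && b % d == 0)
            return d;   // the first common divisor found from the top is the greatest
    }
    return 1;           // not reached for positive a and b, since 1 divides both
}
```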

Euclidean Algorithm:

The Euclidean Algorithm for calculating the GCD of two numbers builds on several facts:

If we subtract the smaller number from the larger number then the GCD is not changed.

i.e. gcd(a,b) = gcd(b,a-b) [considering a>=b]

If a is 0 and b is non-zero, the GCD is by definition b(or vice versa). If both are zero, then the GCD is undefined; but we can consider it to be zero, which gives us a very basic rule:

If one of the numbers is 0, then the GCD is the other number.

We can build upon these facts. Subtracting b from a repeatedly, as long as a >= b, leaves exactly a % b, so we can further define GCD as follows (the algorithm terminates as soon as one of the numbers becomes 0):

gcd(a,b)=gcd(b,a%b)

#include <utility>  // std::swap

//Recursive approach
int gcd_recursive(int a, int b) {
    if (b == 0)
        return a; //if one term is 0, then the other one is GCD
    else
        return gcd_recursive(b, a % b);
}

//Iterative Approach
int gcd_iterative(int a, int b) {
    while (b) { //loop continues while b is non-zero
        a %= b;
        std::swap(a, b);
    }
    return a;
}

Time complexity: O(log(min(a,b)))

Space complexity(Iterative): O(1)

Space complexity(Recursive): O(log(min(a,b))) [Stack space required for recursive function calls]

L.C.M:

LCM (Least Common Multiple) of two integers u and v is the smallest positive integer that is divisible by both u and v.

//function to calculate lcm (divides before multiplying to reduce the risk of overflow)
int lcm(int a, int b) {
    return a / gcd_iterative(a, b) * b;
}

LCM also has a very interesting relation with GCD:

u*v = lcm(u,v)*gcd(u,v)

or, lcm(u,v) = (u*v)/gcd(u,v)

[for positive u and v; for other non-zero values, use |u*v| instead of u*v]
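If a C++17 compiler is available, the standard header &lt;numeric&gt; already provides std::gcd and std::lcm, which can be used to check the identity above on small positive values. The helper name identity_holds is only for illustration.

```cpp
#include <cassert>
#include <numeric>

// Checks u*v == lcm(u, v) * gcd(u, v) for positive u, v (C++17 std::gcd / std::lcm).
bool identity_holds(long long u, long long v) {
    return u * v == std::lcm(u, v) * std::gcd(u, v);
}
```

For u = 4 and v = 6: gcd = 2, lcm = 12, and 4*6 = 24 = 12*2.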

Important Properties:

1. gcd(a,b)=gcd(b,a)

2. gcd(a,b,c)=gcd(a,gcd(b,c))=gcd(gcd(a,b),c)=gcd(b,gcd(a,c))

3. Depending on the parity of a and b, the following cases may arise:

(a). If both the numbers are even, then we can say,

gcd(2*a,2*b)=2*gcd(a,b)

(b). If only one of the numbers is even, while the other is odd,

gcd(2*a,b)=gcd(a,b) [b is odd]

(c). If both numbers are odd,

gcd(a,b)=gcd(b,a−b) [provided a>=b]
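Rules 3(a) to 3(c), together with gcd(a,0) = a, are already enough to compute a GCD without any division. The sketch below is the binary GCD (often called Stein's algorithm) written directly from those rules; the name binary_gcd is hypothetical and the inputs are assumed non-negative.

```cpp
#include <cassert>

// Binary GCD built only from the parity rules 3(a)-(c) (non-negative inputs).
unsigned binary_gcd(unsigned a, unsigned b) {
    if (a == 0) return b;                    // gcd(0, b) = b
    if (b == 0) return a;                    // gcd(a, 0) = a
    if (a % 2 == 0 && b % 2 == 0)            // rule (a): both even
        return 2 * binary_gcd(a / 2, b / 2);
    if (a % 2 == 0)                          // rule (b): only a is even
        return binary_gcd(a / 2, b);
    if (b % 2 == 0)                          // rule (b) with the roles swapped
        return binary_gcd(a, b / 2);
    // rule (c): both odd, subtract the smaller from the larger (the result is even)
    return a >= b ? binary_gcd(a - b, b) : binary_gcd(b - a, a);
}
```

For example, binary_gcd(48, 18) = 2 * binary_gcd(24, 9) = 2 * 3 = 6.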

Extended Euclidean Algorithm:

Besides gcd(a,b), the Extended Euclidean Algorithm finds integer coefficients x and y such that the corresponding linear combination of a and b equals the GCD:

i.e., a*x + b*y = gcd(a,b) [x, y are integer coefficients]

For example, let a=15, b=35.

Then gcd(a,b)=5, x=-2 and y=1,

since 15*(-2)+35*1=5.

Another important topic, Linear Diophantine Equations, will also build upon this algorithm.

Algorithm:

The key to understanding the algorithm is figuring out how the coefficients (x,y) change when we move from the call gcd(a,b) to the call gcd(b,a%b) in the recursive implementation.

Let us assume we have the coefficients (x1,y1) for (b,a%b);

∴ b*x1+(a%b)*y1=gcd(b,a%b)........(i)

We finally want to find the pair (x,y) for (a,b);

∴ a*x+b*y=gcd(a,b)........(ii)

Again, we know from the rules of modulus:

a%b=a-floor(a/b)*b........(iii)

[floor() function represents the mathematical floor function]

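Substituting (iii) into (i) and regrouping the terms by a and b:

b*x1 + (a-floor(a/b)*b)*y1 = a*y1 + b*(x1-y1*floor(a/b)) = gcd(b,a%b)

Since gcd(b,a%b) = gcd(a,b), comparing this with (ii) gives:

x=y1

y=x1-y1*floor(a/b)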

//Recursive implementation
int extended_euclid_recursive(int a, int b, int& x, int& y) {
    if (b == 0) {           // gcd(a,0) = a = a*1 + 0*0
        x = 1;
        y = 0;
        return a;
    }
    int x1, y1;
    int gcd = extended_euclid_recursive(b, a % b, x1, y1);
    x = y1;
    y = x1 - y1 * (a / b);  // a / b equals floor(a/b) for non-negative a, b
    return gcd;
}

//Iterative implementation
int extended_euclid_iterative(int a, int b, int& x, int& y) {
    x = 1, y = 0;
    int x1 = 0, y1 = 1, a1 = a, b1 = b;
    // invariant: a*x + b*y == a1 and a*x1 + b*y1 == b1
    while (b1) {
        int q = a1 / b1;
        tie(x, x1) = make_tuple(x1, x - q * x1);
        tie(y, y1) = make_tuple(y1, y - q * y1);
        tie(a1, b1) = make_tuple(b1, a1 - q * b1);
    }
    return a1;
}
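To check the update rule against the worked example (a = 15, b = 35), here is a self-contained copy of the iterative version, renamed ext_gcd for illustration. It should return 5 with x = -2 and y = 1.

```cpp
#include <cassert>
#include <tuple>

// Iterative extended Euclid: returns g = gcd(a, b) and sets x, y with a*x + b*y = g.
int ext_gcd(int a, int b, int& x, int& y) {
    int x1 = 0, y1 = 1;
    x = 1; y = 0;
    while (b) {
        int q = a / b;
        std::tie(x, x1) = std::make_tuple(x1, x - q * x1);
        std::tie(y, y1) = std::make_tuple(y1, y - q * y1);
        std::tie(a, b) = std::make_tuple(b, a - q * b);
    }
    return a;
}
```

Tracing ext_gcd(15, 35): the quotients are 0, 2, 3, and the pairs (a, b) go (15, 35), (35, 15), (15, 5), (5, 0).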

Study Material for GCD

Sieve

What is Sieve:

The Sieve of Eratosthenes is an algorithm for finding all prime numbers in the range 1 to n in O(n log(log n)) operations.

Naive approach:

#include <bits/stdc++.h>
using namespace std;

int main()
{
    int n, flag = 0;
    cin >> n;
    vector<int> primes;
    for (int i = 2; i <= n; i++)
    {
        flag = 0;
        for (int j = 2; j < i; j++)
            if (i % j == 0)
            {
                flag = 1;
                break;
            }
        if (flag == 0) primes.push_back(i);
    }
    for (int i = 0; i < (int)primes.size(); i++) cout << primes[i] << endl;
}

We loop over every number from 2 to n and, for each one, check whether it is prime by testing every possible divisor smaller than it.

The time complexity is O(n^2).

#include <bits/stdc++.h>
using namespace std;

int main()
{
    int n, flag = 0;
    cin >> n;
    vector<int> primes;
    for (int i = 2; i <= n; i++)
    {
        flag = 0;
        for (int j = 2; j * j <= i; j++)
            if (i % j == 0)
            {
                flag = 1;
                break;
            }
        if (flag == 0) primes.push_back(i);
    }
    for (int i = 0; i < (int)primes.size(); i++) cout << primes[i] << endl;
}

This is a better approach than the naive one:

It is enough to check divisors up to sqrt(i): if i = d1*d2 is composite, at least one of d1 and d2 is smaller than or equal to sqrt(i).

The time complexity of the code is O(n*sqrt(n)).

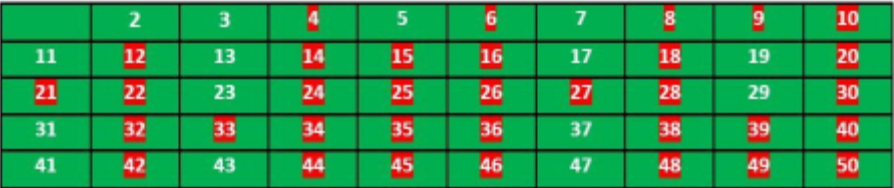

USING SIEVE:

In the sieve algorithm, we create an integer array of length n+1 (indices 0 to n) and initially mark every number from 2 to n as prime. We start with 2, the smallest prime number, and mark all of its proper multiples as composite. A proper multiple of a number num is a number greater than num and divisible by num. Then we find the next number that hasn't been marked as composite; in this case it is 3. This means 3 is prime, so we mark all proper multiples of 3 as composite, and we continue this procedure. It is enough to do this for numbers up to the square root of n, because every composite number up to n has a prime factor no larger than sqrt(n). In the code below, marking for a prime i starts at i*i, since the smaller multiples of i have already been marked by smaller primes.

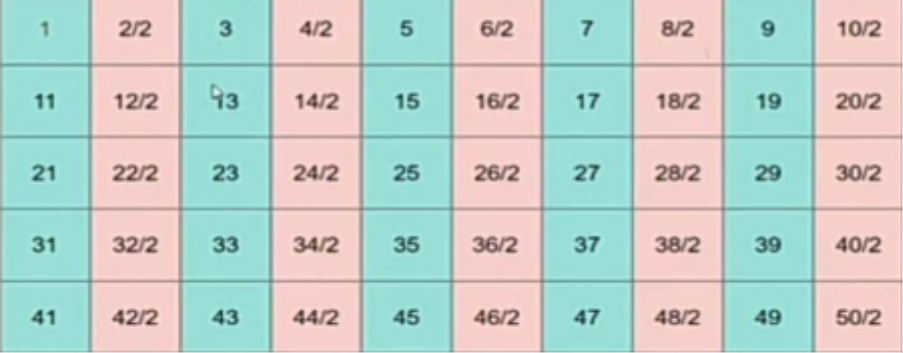

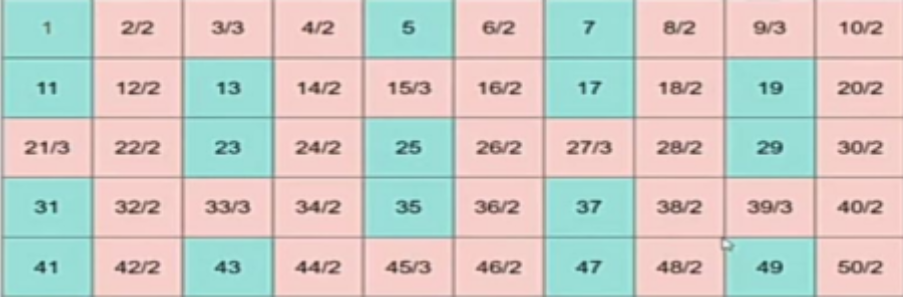

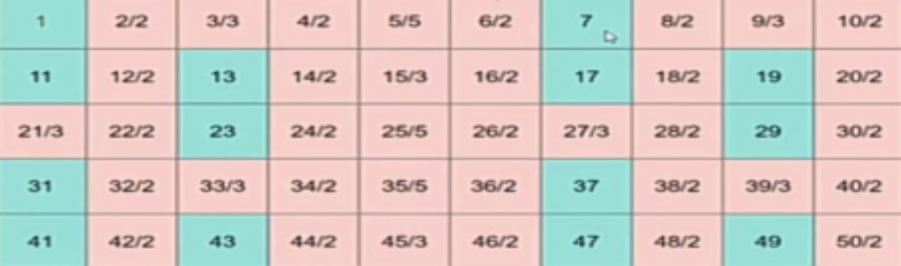

For n=50, the primes we are going to get are: 2, 3, 5, 7, 11, 13, 17, 19, 23, 29, 31, 37, 41, 43, 47.

#include <bits/stdc++.h>
using namespace std;

int main()
{
    int n;
    cin >> n;
    vector<int> prime(n + 2, 1);     // n + 2 so that prime[1] exists even for n = 0
    prime[0] = prime[1] = 0;         // 0 and 1 are not prime
    for (int i = 2; i * i <= n; i++) {
        if (prime[i] == 1) {         // i is prime: cross off its multiples
            for (int j = i * i; j <= n; j += i)
                prime[j] = 0;
        }
    }
    for (int i = 1; i <= n; i++) if (prime[i] == 1) cout << i << endl;
}

The time complexity of the above code is O(n*log(log n)).

We can reduce the number of operations further by skipping even numbers: every even number other than 2 is composite, so we only need to sieve with, and check, odd numbers.

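#include <bits/stdc++.h>
using namespace std;

int main()
{
    int n;
    cin >> n;
    vector<int> prime(n + 2, 1);     // n + 2 so that prime[1] exists even for n = 0
    prime[0] = prime[1] = 0;
    for (int i = 3; i * i <= n; i += 2) {      // only odd candidates
        if (prime[i] == 1) {
            // i*i is odd, and stepping by 2*i visits only the odd multiples;
            // even entries are never read below
            for (int j = i * i; j <= n; j += 2 * i)
                prime[j] = 0;
        }
    }
    if (n >= 2)
        cout << 2 << endl; // as 2 is a prime number
    for (int i = 3; i <= n; i += 2) if (prime[i] == 1) cout << i << endl;
}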

PRIME FACTORIZATION USING SIEVE:

To find the prime factors of numbers using a sieve, we first create an array and initialize each element with its own index. Then we perform the sieve operation, but instead of marking multiples as composite we store their smallest prime factor: multiples of 2 get 2, the remaining multiples of 3 get 3, and so on. Numbers that keep their own value are prime.

Then, to factorize n, we run a loop while n is greater than 1: print the factor stored in ar[n], then divide n by ar[n]. Each step takes O(1), and there are at most log2(n) steps.

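#include<bits/stdc++.h>
using namespace std;

const int MAXN = 100000;
vector<int> ar(MAXN + 1, 0);   // ar[v] = smallest prime factor of v (size MAXN+1 so ar[MAXN] is valid)

void sieve(int maxn)
{
    for (int i = 0; i <= maxn; i++) ar[i] = i;
    for (int i = 2; i * i <= maxn; i++)
    {
        if (ar[i] == i)                       // i is prime
        {
            for (int j = i * i; j <= maxn; j += i)
                if (ar[j] == j) ar[j] = i;    // keep only the smallest prime factor
        }
    }
}

int main()
{
    sieve(MAXN);
    int t;
    cin >> t;
    while (t--)
    {
        int n;
        cin >> n;                            // assumes 1 <= n <= MAXN
        while (n > 1)
        {
            cout << ar[n] << endl;           // print one prime factor
            n /= ar[n];
        }
    }
}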

SEGMENTED SIEVE

The idea of a segmented sieve is to divide a range into segments and compute the primes in each segment one by one. It is useful when we need all prime numbers in a range [L, R] of small size (e.g., R−L+1 ≈ 10^7), where R itself can be very large (e.g., 10^12), so a simple sieve up to R would not fit in memory. The steps are:

1. Use the simple sieve to find all primes up to sqrt(R) and store them in an array primes[].

2. For each of these primes, mark its multiples inside [L, R] as composite. Every composite number in [L, R] has a prime factor no larger than sqrt(R), so the unmarked numbers that remain are exactly the primes in [L, R].

vector<bool> segmentedSieve(long long L, long long R) {
    // generate all primes up to sqrt(R)
    long long lim = sqrt(R);
    vector<bool> mark(lim + 1, false);
    vector<long long> primes;
    for (long long i = 2; i <= lim; ++i) {
        if (!mark[i]) {
            primes.emplace_back(i);
            for (long long j = i * i; j <= lim; j += i)
                mark[j] = true;
        }
    }
    // isPrime[v - L] tells whether v in [L, R] is prime
    vector<bool> isPrime(R - L + 1, true);
    for (long long i : primes)
        // start at the first multiple of i that is >= L (and at least i*i)
        for (long long j = max(i * i, (L + i - 1) / i * i); j <= R; j += i)
            isPrime[j - L] = false;
    if (L == 1)
        isPrime[0] = false;   // 1 is not prime
    return isPrime;
}

The time complexity of this approach is O((R-L+1) log log(R) + sqrt(R) log log(sqrt(R))).
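As a usage sketch, the function below follows the same two steps but returns the primes themselves, which makes it easy to test on a small range. The name primes_in_range is hypothetical, it assumes 1 <= L <= R, and it adds a small correction so that a rounded floating-point sqrt cannot make the limit off by one.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Primes in [L, R] (requires 1 <= L <= R) via a segmented sieve.
std::vector<long long> primes_in_range(long long L, long long R) {
    long long lim = (long long)std::sqrt((double)R);
    while (lim * lim > R) --lim;                 // guard against sqrt rounding up
    while ((lim + 1) * (lim + 1) <= R) ++lim;    // ... or rounding down
    std::vector<bool> mark(lim + 1, false);
    std::vector<long long> small;                // step 1: primes up to sqrt(R)
    for (long long i = 2; i <= lim; ++i) {
        if (mark[i]) continue;
        small.push_back(i);
        for (long long j = i * i; j <= lim; j += i) mark[j] = true;
    }
    std::vector<bool> is_prime(R - L + 1, true); // step 2: cross off inside [L, R]
    for (long long p : small)
        for (long long j = std::max(p * p, (L + p - 1) / p * p); j <= R; j += p)
            is_prime[j - L] = false;
    if (L == 1) is_prime[0] = false;             // 1 is not prime
    std::vector<long long> out;
    for (long long v = L; v <= R; ++v)
        if (is_prime[v - L]) out.push_back(v);
    return out;
}
```

For [10, 30], the small primes are 2, 3 and 5; after crossing off their multiples, 11, 13, 17, 19, 23 and 29 remain.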

Study Material for Sieve

LDE

Linear Diophantine Equations

Introduction:

A Diophantine equation is a polynomial equation in two (or more) unknowns for which we only look for integer solutions.

A linear Diophantine equation is a Diophantine equation of degree 1, i.e. each variable appears only to the first power and variables are never multiplied together.

General form:

A Linear Diophantine Equation(in two variables) is an equation of the general form:

ax + by = c

Where a,b,c are integral constants and x,y are integral variables.

Homogeneous Linear Diophantine equation:

A Homogeneous Linear Diophantine Equation(in two variables) is an equation of the general form:

ax + by = 0

Where a,b are integral constants and x,y are the integral variables.

By looking at the equation we can easily see that x=0, y=0 is a solution. This is called the trivial solution.

Other solutions can exist as well; for example, x = b, y = -a also satisfies the equation.

Condition for the solution to exist:

For a linear Diophantine equation, an integer solution exists if and only if:

c%gcd(a,b)=0

i.e. c must be divisible by the greatest common divisor of a and b. To see why the condition is necessary, let g = gcd(a,b) and write a = g.A and b = g.B, where A and B are integers. The equation then becomes:

g.A.x + g.B.y = c

g.(A.x + B.y) = c

Since A.x + B.y is an integer, c must be a multiple of g. Conversely, when g divides c, the Extended Euclidean algorithm produces a solution, as shown in the next part.

For the Homogeneous Linear Diophantine equation, a solution always exists, namely the trivial one. This is because c=0, and 0 is divisible by every integer.

Finding a solution:

A solution can easily be found using the Extended Euclidean algorithm, which gives integers x1 and y1 such that:

a.x1 + b.y1 = gcd(a,b)

Since c is divisible by gcd(a,b), we can multiply both sides by the integer c/gcd(a,b):

a.x1.{c/gcd(a,b)} + b.y1.{c/gcd(a,b)} = c

a.{x1.(c/gcd(a,b))} + b.{y1.(c/gcd(a,b))} = c ….. (1)

Now, by comparing equation (1) with the general Linear Diophantine equation we get:

x0 = x1 . c/gcd(a,b)

y0 = y1 . c/gcd(a,b)

where x0 and y0 are a solution of the Linear Diophantine equation.

Implementation:

Program to find a solution of a given Linear Diophantine equation.

#include<bits/stdc++.h>
using namespace std;

// function to find the gcd of a,b and coefficients x,y
// using the Extended Euclidean Algorithm
int extended_gcd(int a, int b, int &x, int &y)
{
    if (a == 0) // base case
    {
        x = 0;
        y = 1;
        return b; // here b will be the gcd
    }
    int x1, y1;
    int g = extended_gcd(b % a, a, x1, y1);
    x = y1 - (b / a) * x1;
    y = x1;
    return g;
}

// function to find a solution of the Diophantine equation, if any
int one_solution(int a, int b, int c, int &x, int &y)
{
    int g = extended_gcd(a, b, x, y);
    if (g == 0)                 // a == b == 0: solvable only when c == 0
    {
        x = y = 0;
        return c == 0;
    }
    if (c % g == 0)             // condition for a solution to exist
    {
        // solution to the Diophantine equation (divide first to avoid overflow in x*c)
        x = x * (c / g);
        y = y * (c / g);
        return 1;               // at least 1 solution exists
    }
    return 0;                   // no solution exists
}

int main()
{
    int a, b, c, x, y;
    cin >> a >> b >> c;
    if (one_solution(a, b, c, x, y)) // if a solution exists
        cout << "Solution is: " << x << " " << y;
    else                             // if no solution exists
        cout << "Solution does not exist";
}

Time Complexity - O(log(max(a,b)))

Auxiliary Space - O(1)

Finding all solutions:

Let C0 be any integer.

Adding and subtracting C0.a.b/gcd(a,b) on the left-hand side of a.x0 + b.y0 = c, where x0 and y0 are a solution of the Linear Diophantine equation, we get:

a.x0 + b.y0 + C0.a.b/gcd(a,b) - C0.a.b/gcd(a,b) = c

a.x0 + C0.a.b/gcd(a,b) + b.y0 - C0.a.b/gcd(a,b) = c

a.(x0 + C0.b/gcd(a,b)) + b.(y0 - C0.a/gcd(a,b)) = c ….. (2)

Comparing equation (2) with the general Linear Diophantine equation, we get:

x = x0 + C0.b/gcd(a,b)

y = y0 - C0.a/gcd(a,b)

Here b/gcd(a,b) and a/gcd(a,b) are integers, so every integer C0 gives an integer solution (x, y), and every solution of the equation can be written in this form.
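The general formula can be turned into a small generator. In this sketch, some_solutions is a hypothetical helper that returns the solutions for C0 = lo..hi; it reuses the same extended-Euclid recursion as the program above (with long long) and assumes a and b are not both zero.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Extended Euclid (same recursion as extended_gcd above): a*x + b*y = g.
long long ext_gcd(long long a, long long b, long long& x, long long& y) {
    if (a == 0) { x = 0; y = 1; return b; }
    long long x1, y1;
    long long g = ext_gcd(b % a, a, x1, y1);
    x = y1 - (b / a) * x1;
    y = x1;
    return g;
}

// Solutions of a*x + b*y = c for C0 = lo..hi, using
// x = x0 + C0*b/g, y = y0 - C0*a/g. Empty if c % g != 0.
std::vector<std::pair<long long, long long>>
some_solutions(long long a, long long b, long long c, long long lo, long long hi) {
    std::vector<std::pair<long long, long long>> out;
    long long x0, y0;
    long long g = ext_gcd(a, b, x0, y0);
    if (c % g != 0) return out;              // no solution at all
    x0 *= c / g;                             // one particular solution (x0, y0)
    y0 *= c / g;
    for (long long k = lo; k <= hi; ++k)
        out.push_back({x0 + k * (b / g), y0 - k * (a / g)});
    return out;
}
```

For 15x + 35y = 10: g = 5, the particular solution is (-4, 2), and the general solution is x = -4 + 7*C0, y = 2 - 3*C0, giving (-11, 5), (-4, 2) and (3, -1) for C0 = -1, 0, 1.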

Study Material for LDE

Reference Study - CP-Algorithms

Diophantine_equation - Wikipedia

Linear-Diophantine-Equations GFG

Linear-Diophantine-Equations-one-equation Brilliant

CRT

Chinese Remainder Theorem

Introduction:

Given a system of n equations:

x % q[1] = r[1], i.e. x ≡ r[1] (mod q[1])

x % q[2] = r[2]

x % q[3] = r[3]

...

x % q[n] = r[n]

where q[1], q[2], ..., q[n] are pairwise coprime positive integers, i.e. for any two numbers q[i] and q[j] (i≠j)

GCD(q[i], q[j]) = 1

and r[1], ..., r[n] are the remainders when x is divided by q[1], ..., q[n] respectively. According to the Chinese Remainder Theorem, this system always has a solution, and the solution is unique modulo N, where

N = q[1].q[2]...q[n]

Finding solution:

Assume C0 is a solution to the system, i.e. C0 satisfies every equation in it. Then Xi = C0 + t.K is also a solution for any integer t, where

K = LCM(q[1], q[2], q[3], ..., q[n])

LCM denotes the least common multiple of q[1], q[2], ..., q[n]. Adding a multiple of K does not change any of the remainders, because K is divisible by every q[i].

Since q[1], q[2], ..., q[n] are pairwise coprime,

LCM(q[1], q[2], ..., q[n]) = q[1].q[2].q[3]...q[n] = N

Therefore, the positive solutions of the system are

Xi = C0 + t.K, t = 0, 1, 2, ...

where C0 is the minimum positive solution. So, to describe all solutions of the system, we only need to find the minimum positive solution.

Finding minimum positive solution:

Brute Force Approach:

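A simple way to find the minimum positive solution is to iterate from 1 until we find the first value C0 that satisfies all n equations. Since a solution always exists in the range 1 to N, this takes at most N iterations, each checking n equations.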

Implementation: function to find minimum positive solution.

int Find_x(int q[], int r[], int n)
{
    int x = 1;   // initializing minimum positive solution
    bool flag;
    while (1)    // we iterate till we get a solution
    {
        flag = true;
        for (int i = 0; i < n; i++)
        {
            if (x % q[i] == r[i])   // check if x gives remainder r[i] when divided by q[i]
                continue;
            flag = false;           // if the remainder is not r[i], this x cannot be the answer
            break;
        }
        if (flag)                   // if x satisfies all n equations, we have found the answer
            break;
        x++;                        // check further values
    }
    return x;
}

Efficient Approach:

The general solution of the system looks like

Xi = r[1].y[1].inv[1] + r[2].y[2].inv[2] + ... + r[n].y[n].inv[n] .... (1)

where

y[1] = q[2].q[3].q[4]...q[n], i.e. y[1] = N/q[1]

y[2] = q[1].q[3].q[4]...q[n]

...

y[n] = q[1].q[2].q[3]...q[n-1]

and inv[i] is the modular inverse of y[i] modulo q[i]:

inv[1] = y[1]^(-1) mod q[1]

inv[2] = y[2]^(-1) mod q[2]

...

inv[n] = y[n]^(-1) mod q[n]

The inverse exists because y[i] is a product of moduli that are all coprime to q[i]. The term r[i].y[i].inv[i] leaves remainder r[i] modulo q[i] and remainder 0 modulo every other q[j], since y[i] is divisible by q[j] for j ≠ i.

The Chinese Remainder Theorem guarantees that the solution is unique modulo N. So, to find the smallest non-negative solution, we take (Xi mod N), where N = q[1].q[2]...q[n]. Therefore

x = Xi % N, or

x = (r[1].y[1].inv[1] + r[2].y[2].inv[2] + ... + r[n].y[n].inv[n]) % N …. (2)
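As a worked instance of equation (2), take the system x ≡ 2 (mod 3), x ≡ 3 (mod 5), x ≡ 2 (mod 7); the numbers are chosen only for illustration. Each inverse can be checked by hand, and the result 23 indeed leaves remainders 2, 3 and 2.

```latex
\begin{aligned}
&N = 3 \cdot 5 \cdot 7 = 105\\
&y[1] = 35,\quad \mathrm{inv}[1] = 2 \quad (35 \cdot 2 = 70 \equiv 1 \pmod 3)\\
&y[2] = 21,\quad \mathrm{inv}[2] = 1 \quad (21 \equiv 1 \pmod 5)\\
&y[3] = 15,\quad \mathrm{inv}[3] = 1 \quad (15 \equiv 1 \pmod 7)\\
&x = (2 \cdot 35 \cdot 2 + 3 \cdot 21 \cdot 1 + 2 \cdot 15 \cdot 1) \bmod 105 = 233 \bmod 105 = 23
\end{aligned}
```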

Implementation: function to find minimum positive solution.

// function to find gcd and coefficients using Extended Euclidean Algorithm
long long Extended_gcd(long long a, long long b, long long &x, long long &y)
{
    if (a == 0)
    {
        x = 0;
        y = 1;
        return b;
    }
    long long x1, y1;
    long long g = Extended_gcd(b % a, a, x1, y1);
    x = y1 - (b / a) * x1;
    y = x1;
    return g;
}

// function to find the inverse of val modulo m (val and m must be coprime)
long long inv(long long val, long long m)
{
    long long x, y;
    Extended_gcd(val, m, x, y);      // val*x + m*y = 1, so val*x ≡ 1 (mod m)
    return (x % m + m) % m;          // bring x into the range [0, m)
}

// function to find the minimum non-negative solution
// (long long arithmetic; assumes N times the largest q[i] fits in a long long)
long long Find_x(int q[], int r[], int n)
{
    long long x = 0, N = 1;
    for (int i = 0; i < n; i++)
        N *= q[i];                   // compute N = q[0].q[1]...q[n-1]
    for (int i = 0; i < n; i++)
    {
        long long y = N / q[i];
        // computing x according to equation (2)
        x = (x + r[i] * y % N * inv(y, q[i])) % N;
    }
    return x;
}

Study Material for CRT

Chinese Remainder Theorem - cp-algorithms reference

Chinese Remainder Theorem - Wikipedia Reference

Chinese Remainder Theorem - GFG

Chinese Remainder Theorem - Codechef

Chinese Remainder Theorem - Youtube reference

Practice Problem 1

Practice Problem 2

Practice Problem 3

Fermat's Theorem

FERMAT’S LITTLE THEOREM

Fermat’s little theorem states that if p is a prime number, then for any integer a, the number a^p - a is an integer multiple of p.

a^p ≡ a (mod p), where p is prime.

If a is not divisible by p, Fermat’s little theorem is equivalent to the statement that a^(p-1) - 1 is an integer multiple of p.

a^(p-1) ≡ 1 (mod p)

Applications of Fermat’s little theorem

1. Modular multiplicative inverse

If we know p is prime and a is not divisible by p, then we can also use Fermat’s little theorem to find the inverse of a modulo p.

a^(p-1) ≡ 1 (mod p)

If we multiply both sides by a^(-1), we get

a^(-1) ≡ a^(p-2) (mod p)

// C++ program to find modular multiplicative inverse
// using Fermat's little theorem.
#include <bits/stdc++.h>
using namespace std;

// Modular exponentiation to compute x^y under modulo p
// (long long so that res * res does not overflow for p < 2^31)
long long power(long long x, unsigned int y, long long p)
{
    if (y == 0)
        return 1;
    long long res = power(x, y / 2, p) % p;
    res = (res * res) % p;
    return (y % 2 == 0) ? res : (x % p * res) % p;
}

// Function to find modular inverse of a under modulo p (p prime)
void modInverse(int a, int p)
{
    if (__gcd(a, p) != 1)
        cout << "Inverse doesn't exist";
    else {
        // If a and p are relatively prime, then
        // the modular inverse is a^(p-2) mod p
        cout << "Modular multiplicative inverse is "
             << power(a, p - 2, p);
    }
}

// Driver Program
int main()
{
    int a = 3, p = 11;
    modInverse(a, p);   // prints 4, since 3*4 = 12 ≡ 1 (mod 11)
    return 0;
}

2. Primality Test

In Fermat primality testing, k random integers a with gcd(a, N) = 1 are chosen. If a^(N-1) ≡ 1 (mod N), as Fermat's little theorem requires, holds for all k of these values of a, then N is declared a probable prime. If the check fails for any value of a, N is definitely composite.

// C++ program to check if N is prime.
#include <bits/stdc++.h>
using namespace std;

/* Iterative function to calculate (a^n)%p in O(log n) */
unsigned long long power(unsigned long long a, unsigned int n, unsigned long long p)
{
    unsigned long long res = 1;   // Initialize result
    a = a % p;                    // Update 'a' if 'a' >= p
    while (n > 0)
    {
        // If n is odd, multiply 'a' with result
        if (n & 1)
            res = (res * a) % p;  // no overflow: both factors are < p < 2^32
        // n must be even now
        n = n >> 1;               // n = n/2
        a = (a * a) % p;
    }
    return res;
}

// If n is prime, then always returns true. If n is
// composite, then returns false with high probability.
// A higher value of k increases the probability of a correct result.
bool isPrime(unsigned int n, int k)
{
    // Corner cases
    if (n <= 1 || n == 4) return false;
    if (n <= 3) return true;

    // Try k times
    while (k > 0)
    {
        // Pick a random number in [2..n-3]
        // Above corner cases make sure that n > 4
        int a = 2 + rand() % (n - 4);

        // Checking if a and n are co-prime
        if (__gcd(n, (unsigned int)a) != 1)
            return false;

        // Fermat's little theorem
        if (power(a, n - 1, n) != 1)
            return false;

        k--;
    }
    return true;
}

// Driver program to test the above function
int main()
{
    int k = 3;
    isPrime(11, k) ? cout << " true\n" : cout << " false\n";
    isPrime(15, k) ? cout << " true\n" : cout << " false\n";
    return 0;
}
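The word "probable" matters: some composite numbers pass the Fermat check for every base coprime to them. These are the Carmichael numbers, the smallest being 561 = 3 * 11 * 17. The sketch below (hypothetical helpers pow_mod and fermat_passes, moduli assumed below 2^32) checks a single base deterministically to show this.

```cpp
#include <cassert>
#include <cstdint>

// a^e mod m with 64-bit intermediates (m assumed < 2^32, so products fit).
uint64_t pow_mod(uint64_t a, uint64_t e, uint64_t m) {
    uint64_t r = 1 % m;
    a %= m;
    while (e > 0) {
        if (e & 1) r = r * a % m;   // multiply in the current bit
        a = a * a % m;              // square for the next bit
        e >>= 1;
    }
    return r;
}

// One Fermat check: true means "n looks prime to base a".
bool fermat_passes(uint64_t n, uint64_t a) {
    return pow_mod(a, n - 1, n) == 1;
}
```

Base 2 exposes 15 (2^14 mod 15 = 4) but not 561, because 2^560 ≡ 1 modulo 3, 11 and 17 alike. Only a base sharing a factor with 561, such as 3, reveals it; this is why stronger tests such as Miller-Rabin are usually preferred.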

Study Material for Fermat's Theorem

Compute nCr % p - Using Fermat's Theorem

GFG - Fermat's Little Theorem

Practice Problem 1

Practice Problem 2

Bitwise

Operations and Properties

Introduction to Bits

We usually work with data types such as int, char, float, etc., or with data structures (i.e. generally at the byte level), but sometimes programmers need to work at the bit level for purposes such as encryption, data compression, etc.

Apart from that, operations on bits are fast and are often used to optimize programs.

Bit Manipulation

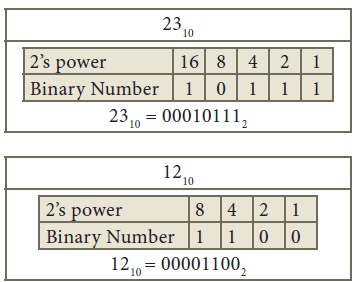

Any number can be represented in terms of bits, which is known as its binary or base-2 form.

1 byte comprises 8 bits.

For example:

(21)₁₀ = (10101)₂ in binary form,

since 21 = 1*2^4 + 0*2^3 + 1*2^2 + 0*2^1 + 1*2^0 = 16 + 4 + 1.

Suppose we use an int to store 21. An int is typically 4 bytes, so it uses a 32-bit representation whose last five bits are 10101 (all other bits are 0).

Bitwise Operators

There are different operators that work on bits and are used for optimizing programs in terms of time.

Unary Operators

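1. NOT (~) : Bitwise NOT is a unary operator that flips the bits of the number, i.e. every 0 bit becomes 1 and vice versa. It gives the one’s complement of a number. (Note that ! is logical NOT, not bitwise NOT.)

Example: N = 5 = (101)₂

Looking only at the lowest 3 bits: ~N = ~5 = ~(101)₂ = (010)₂ = 2

For a 32-bit int, however, all 32 bits are flipped, so ~5 = -6 in two’s complement.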

Binary Operators

1. AND (&) : Bitwise AND operates on two equal-length bit patterns. If both bits at the ith position of the bit patterns are 1, the bit in the resulting bit pattern is 1, otherwise 0.

Example:

  00001100 = (12)₁₀
& 00011001 = (25)₁₀
  ________
  00001000 = (8)₁₀

2. OR (|) : Bitwise OR also operates on two equal-length bit patterns. If both bits at the ith position of the bit patterns are 0, the bit in the resulting bit pattern is 0, otherwise 1.

Example:

  00001100 = (12)₁₀
| 00011001 = (25)₁₀
  ________
  00011101 = (29)₁₀

3. XOR (^) : Bitwise XOR also operates on two equal-length bit patterns. If the bits at the ith position of the two bit patterns are equal (both 0 or both 1), the bit in the resulting bit pattern is 0, otherwise 1.

Example:

  00001100 = (12)₁₀
^ 00011001 = (25)₁₀
  ________
  00010101 = (21)₁₀

4. Left Shift (<<): The left shift operator is a binary operator that shifts the bits of the given bit pattern to the left by some number of positions k and fills the vacated positions on the right with 0. Shifting left by k is equivalent to multiplying by 2^k (as long as the result does not overflow).

Example:

Consider shifting 8 to the left by 1:

(8)₁₀ = (1000)₂, so 8 << 1 = (10000)₂ = (16)₁₀

5. Right Shift (>>): The right shift operator is a binary operator that shifts the bits of the given bit pattern to the right by k positions, discarding the bits shifted out; for non-negative numbers the vacated positions on the left are filled with 0. Shifting right by k is equivalent to dividing by 2^k and rounding down.

Example:

Consider shifting 3 to the right by 1:

(3)₁₀ = (0011)₂, so 3 >> 1 = (0001)₂ = (1)₁₀
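The operator examples above can be checked directly in C++. This sketch assumes a two's-complement int (required since C++20 and universal in practice) and uses the C++14 binary literal 0b111; the function name check_operator_examples is only illustrative.

```cpp
#include <cassert>

// Runtime checks of the bitwise operator examples.
void check_operator_examples() {
    assert((12 & 25) == 8);        // 01100 & 11001 = 01000
    assert((12 | 25) == 29);       // 01100 | 11001 = 11101
    assert((12 ^ 25) == 21);       // 01100 ^ 11001 = 10101
    assert((~5 & 0b111) == 2);     // NOT, looking only at the low 3 bits
    assert(~5 == -6);              // NOT flips every bit of the int: ~x == -(x + 1)
    assert((8 << 1) == 16);        // left shift by 1 doubles
    assert((3 >> 1) == 1);         // right shift by 1 halves, rounding down
    assert((5 << 3) == 5 * 8);     // shifting left by k multiplies by 2^k
    assert((100 >> 2) == 100 / 4); // shifting right by k divides by 2^k (non-negative x)
}
```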

Basic Operations on Bits

In the examples below, bits are numbered from 0, starting at the least significant (rightmost) bit.

1. Bitwise ANDing (Masking): This is used to check whether the ith bit of the given bit pattern is set or not.

Example:

Let x = 12 = (1100)₂, and we have to check whether bit 3 is set.

int x = 12, i = 3;
if (x & (1 << i))
{
    // i th bit is set (true here: 12 & 8 = 8)
}
else
{
    // i th bit is not set
}

2. Bitwise ORing: This is used to set the ith bit of the given bit pattern.

Example:

Let x = 12, and we have to set bit 1.

int x = 12, i = 1;
x = x | (1 << i);   // x becomes (1110)₂ = 14

3. Bitwise XORing: This is used to toggle the ith bit of the given bit pattern.

Example:

Let x = 12, and we have to toggle bit 2.

int x = 12, i = 2;
x = x ^ (1 << i);   // x becomes (1000)₂ = 8

Tricks with Bits

1. x ^ (x & (x-1)) : Returns the rightmost 1 in the binary representation of x, i.e. a number with only the lowest set bit of x.

x & (x-1) has the same bits as x except that the rightmost 1 of x is cleared. So if we take the bitwise XOR of x and x & (x-1), only the rightmost 1 remains. Let’s see an example:

x = 10 = (1010)₂

x & (x-1) = (1010)₂ & (1001)₂ = (1000)₂

x ^ (x & (x-1)) = (1010)₂ ^ (1000)₂ = (0010)₂

2. x & (-x) : Returns the rightmost 1 in the binary representation of x.

(-x) is the two’s complement of x, which equals the one’s complement of x plus 1.

Therefore (-x) has all the bits to the left of the rightmost 1 of x flipped, while that 1 and the 0s to its right stay the same. So x & (-x) returns only the rightmost 1.

Example:

x = 10 = (1010)₂

(-x) = -10 = (...11110110)₂, whose lowest 4 bits are (0110)₂

x & (-x) = (1010)₂ & (0110)₂ = (0010)₂

3. x | (1 << n) : Returns the number x with the nth bit set.

(1 << n) is a number with only the nth bit set, so ORing it with x sets the nth bit of x.

x = 10 = (1010)₂, n = 2

1 << n = (0100)₂

x | (1 << n) = (1010)₂ | (0100)₂ = (1110)₂
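The three tricks translate directly into one-line functions. The names below are hypothetical; the sketch assumes x > 0 (negating INT_MIN would overflow) and 0 <= n <= 30 so that 1 << n fits in an int.

```cpp
#include <cassert>

// Lowest set bit of x, computed two ways (x > 0 assumed).
int lowbit_xor(int x) { return x ^ (x & (x - 1)); }  // x & (x-1) clears the lowest set bit
int lowbit_neg(int x) { return x & (-x); }           // relies on two's complement

// x with bit n set (0 <= n <= 30 assumed).
int with_bit_set(int x, int n) { return x | (1 << n); }
```

With x = 10 = (1010)₂, both lowbit functions return 2 and with_bit_set(10, 2) returns 14 = (1110)₂, matching the examples above.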

Study Material for Bitwise

LEVEL-2: TRAINED

Searching

Linear Search

In the programming world, we often need to find out whether an element is present in the given data set or not.

Formally, we have an array and are asked to find the index of an element k in it, or to report that k is not present.

The simplest way to answer this question is Linear Search.

In linear search, we traverse the entire array and check each element one by one.

// search for k in arr[0..n-1] (arr, n and k are assumed to be declared)
int flag = 0;
for (int i = 0; i < n; i++) {
    if (arr[i] == k) {
        cout << "Required element is found at " << i << endl;
        flag = 1;
        break;
    }
}
if (flag == 0) {
    cout << "Required element not present in the array" << endl;
}

As one can easily see, in the worst case i.e. when the element is not present in the array, we are traversing the entire array. Hence the time complexity of this operation is O(n).

Study Material for Linear Search

Binary Search

Binary Search is one of the most useful search algorithms, with many applications, mainly due to its logarithmic time complexity. For example, if there are 10^9 sorted numbers and you want to search for a particular element, binary search needs only about 30 steps, since 2^30 > 10^9!

The only thing that one must keep in mind is that binary search works only on sorted set of elements.

The main idea of the algorithm can be explained as follows:

Suppose we have a sorted array of n elements, and we want to search for k.

Compare k with the middle element of the array.

If they are equal, we have found k.

If k is bigger, it can only lie to the right of the middle element.

Otherwise, if it is smaller, it can only lie to the left of the middle element.

This means that, in one step, we have reduced the “search space” by half. We continue this halving process until we find the required element or the search space becomes empty.

Remember, we could do this only because the elements were sorted.

In each step we halve the length of the interval, and in the worst case we continue until the length becomes 1. Hence, the time complexity of binary search is O(log2 N), where N is the initial length of the interval.

// Function to find the index of an element k in a sorted array arr[l..r].
int binarySearch(int arr[], int l, int r, int k) {
    while (l <= r) {
        int mid = l + (r - l) / 2;   // avoids the overflow of (l + r) / 2
        // Check if k is present at mid
        if (arr[mid] == k)
            return mid;              // Found the element.
        // If k is greater, ignore the left half
        if (arr[mid] < k)
            l = mid + 1;
        // If k is smaller, ignore the right half
        else
            r = mid - 1;
    }
    // if we reach here, the element was not present.
    return -1;
}

We can implement the binary search algorithm either iteratively, as above, or recursively.

Recursive Implementation

int binarySearch(int arr[], int l, int r, int k)
{
    if (r >= l) {
        int mid = l + (r - l) / 2;
        // If the element is present at the middle
        if (arr[mid] == k)
            return mid;
        // If the element is smaller than arr[mid], continue searching in the left half.
        if (arr[mid] > k)
            return binarySearch(arr, l, mid - 1, k);
        // Else continue searching in the right half.
        return binarySearch(arr, mid + 1, r, k);
    }
    // We reach here when the element is not present in the array.
    return -1;
}

Sometimes we can’t calculate the answer directly from the given data because there are too many possibilities, but we can check whether a particular number is a valid answer or not, i.e. we ask about a candidate and get the feedback “yes” or “no”. If the feedback is monotone (for example, every value up to the answer gets “yes” and every value above it gets “no”), we can use it to halve our “search space” at each step and continue the search for the answer.

This technique is known as Binary Search the Answer.
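A classic small illustration is the integer square root: the question "is m*m <= n?" is answered "yes" for every m up to floor(sqrt(n)) and "no" after it, so we can binary search for the last "yes". The function name isqrt is hypothetical, and n is assumed non-negative.

```cpp
#include <cassert>

// floor(sqrt(n)) for n >= 0, found by binary searching the answer.
long long isqrt(long long n) {
    long long lo = 0, hi = 3037000499LL;         // hi * hi still fits in a signed 64-bit integer
    while (lo < hi) {
        long long mid = lo + (hi - lo + 1) / 2;  // round up so the range always shrinks
        if (mid * mid <= n) lo = mid;            // "yes": the answer is mid or larger
        else hi = mid - 1;                       // "no": the answer is smaller than mid
    }
    return lo;
}
```

The loop keeps the invariant that the answer lies in [lo, hi], so it needs only about 32 checks even for the largest 64-bit inputs.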

Study Material for Binary Search

Lower Bound and Upper Bound

Lower Bound: The lower bound of a number in a sorted array is the first element in the array that is not smaller than the given number. The STL provides a useful built-in function for this,

lower_bound(start_ptr, end_ptr, num). It returns an iterator (a pointer, for plain arrays) to the required element. As this function uses binary search internally, its time complexity is O(log n).

Upper Bound: The upper bound of a number in a sorted array is the first element that is strictly greater than the given number. The STL provides a built-in function for this too:

upper_bound(start_ptr, end_ptr, num). Like lower_bound(), this function returns an iterator to the required element, and its time complexity is O(log n).

Note: These functions return the end iterator if no such element exists, i.e. if every element is smaller than num (lower_bound) or not greater than num (upper_bound).

Note: If the number occurs multiple times in the array, lower_bound returns an iterator to its first occurrence, while upper_bound returns an iterator to the element just after its last occurrence.

vector<int> Ar = {1, 1, 2, 4, 4, 5, 6, 7};
auto l = lower_bound(Ar.begin(), Ar.end(), 4);
// returns an iterator to index 3
auto u = upper_bound(Ar.begin(), Ar.end(), 4);
// returns an iterator to index 5
cout << (*l) << endl;
cout << (*u) << endl;

Output:

4

5
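Because the range [lower_bound, upper_bound) is exactly the run of copies of num, the two functions together count occurrences in O(log n), and subtracting begin() turns an iterator into an index. The helper names count_sorted and lower_index are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Number of copies of num in a sorted vector.
int count_sorted(const std::vector<int>& v, int num) {
    auto lo = std::lower_bound(v.begin(), v.end(), num);
    auto hi = std::upper_bound(v.begin(), v.end(), num);
    return static_cast<int>(hi - lo);
}

// Index of the first element >= num, or v.size() if there is none.
int lower_index(const std::vector<int>& v, int num) {
    return static_cast<int>(std::lower_bound(v.begin(), v.end(), num) - v.begin());
}
```

With Ar = {1, 1, 2, 4, 4, 5, 6, 7}, count_sorted(Ar, 4) is 5 - 3 = 2, while lower_index(Ar, 3) is also 3 because 4 is the first element not smaller than 3.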

Study Material for Lower and Upper Bound

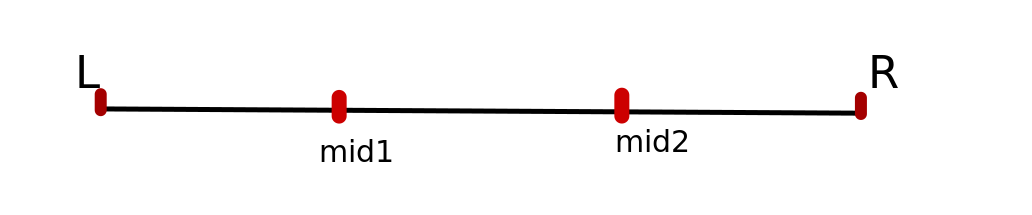

Ternary Search

Just like binary search, ternary search is a divide and conquer algorithm, and the array needs to be sorted to perform it. The only difference is that, instead of dividing the segment [l, r] into two parts, we divide it into three parts using two midpoints, mid1 = l + (r-l)/3 and mid2 = r - (r-l)/3, and find in which part the key element lies.

1. First, we compare the key with the element at mid1. If they are equal, we return mid1.

2. If not, we compare the key with the element at mid2. If they are equal, we return mid2.

3. If not, we check whether the key is less than the element at mid1. If yes, we recurse on the first part.

4. If not, we check whether the key is greater than the element at mid2. If yes, we recurse on the third part.

5. Otherwise, we recurse on the second (middle) part.

// ar[] is the sorted array; searches for k in ar[l..r]
int ternary_search(int ar[], int l, int r, int k)
{
    if (r >= l)
    {
        int mid1 = l + (r - l) / 3;
        int mid2 = r - (r - l) / 3;
        if (ar[mid1] == k)
            return mid1;
        if (ar[mid2] == k)
            return mid2;
        if (k < ar[mid1])            // First part
            return ternary_search(ar, l, mid1 - 1, k);
        else if (k > ar[mid2])       // Third part
            return ternary_search(ar, mid2 + 1, r, k);
        else                         // Second part
            return ternary_search(ar, mid1 + 1, mid2 - 1, k);
    }
    return -1;
}

Study Material for Ternary Search

Sorting

Selection Sort

The idea behind this algorithm is pretty simple. We divide the array into two parts: sorted and unsorted. The left part is a sorted subarray and the right part is an unsorted subarray. Initially, the sorted subarray is empty and the unsorted array is the complete given array.

We perform the steps given below until the unsorted subarray becomes empty:

(Assuming we want to sort it in non-decreasing order)

Pick the minimum element from the unsorted subarray.

Swap it with the leftmost element of the unsorted subarray.

Now the leftmost element of the unsorted subarray becomes a part (rightmost) of the sorted subarray and will not be a part of the unsorted subarray.

void selection_sort(int A[], int n)
{
    int minimum; // temporary variable to store the position of the minimum element
    // reduces the effective size of the unsorted part by one in each iteration.
    for (int i = 0; i < n - 1; i++) {
        // element at index i will be swapped
        // finding the smallest element of the unsorted part A[i..n-1]:
        minimum = i;
        for (int j = i + 1; j < n; j++) {
            if (A[j] < A[minimum]) {
                minimum = j;
            }
        }
        // putting the minimum element in its proper position.
        swap(A[minimum], A[i]);
    }
}

Let's try to understand the algorithm with an example: Arr[ ] = {69, 55, 2, 22, 1}.

At first our array looks like this: {69,55,2,22,1}

Find the minimum element in Arr[0...4] and place it at the beginning

{1,55,2,22,69}

Find the minimum element in Arr[1...4] and place it at beginning of Arr[1...4]

{1,2,55,22,69}

Find the minimum element in arr[2...4] and place it at beginning of arr[2...4]

{1,2,22,55,69}

Find the minimum element in arr[3...4] and place it at beginning of arr[3...4]

{1,2,22,55,69}

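Time Complexity: O(n^2), as there are two nested loops.

Note:

Selection sort never requires more than n-1 swaps.

It is an in-place sorting algorithm.

Its stability depends on the implementation: the swap-based version above is not stable. For example, sorting {2a, 2b, 1} swaps 1 with 2a and gives {1, 2b, 2a}, reversing the order of the two equal keys.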


Study Material for Selection Sort

Bubble Sort

Bubble sort, sometimes referred to as sinking sort, is based on the idea of repeatedly comparing pairs of adjacent elements and swapping them if they are in the wrong order. After each iteration (pass) of the algorithm, the largest remaining element ends up in its final place at the end of the array (or the smallest at the beginning, depending on the direction of the pass). In some sense, the movement of an element during one pass resembles an air bubble rising up through water, hence the name.

Let's try to understand the algorithm with an example: Arr[ ] = {9,2,7,5}.

At first our array looks like this:

{9,2,7,5}

In the first step, we compare the first two elements, 9 and 2. As 9 > 2, we swap them.

{2,9,7,5}

Next, we compare 9 and 7 and similarly swap them.

{2,7,9,5}

Again, we compare 9 and 5 and swap them.

{2,7,5,9}

Now that we have reached the end of the array, the largest element (9) is in its final position, and the second iteration starts.

In the first step, we compare 2 and 7. As 7>2, we need not swap them.

{2,7,5,9}

In the next step, we compare 7 and 5 and swap them.

{2,5,7,9}

The process continues in this way.

In this example, no further swaps happen, because the array is already sorted after the steps shown above.
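As a sketch of the steps above, the hypothetical helper `bubble_sort_swaps` below runs bubble sort on a `std::vector<int>` copy and records the array after every swap, so the intermediate states can be compared with the walkthrough.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Runs bubble sort on a copy of `a` and records the array after every swap
// (hypothetical helper, for tracing only).
std::vector<std::vector<int>> bubble_sort_swaps(std::vector<int> a) {
    std::vector<std::vector<int>> states;
    for (std::size_t i = 0; i + 1 < a.size(); ++i) {
        // the last i elements are already in their final places
        for (std::size_t j = 0; j + 1 < a.size() - i; ++j) {
            if (a[j] > a[j + 1]) {
                std::swap(a[j], a[j + 1]);
                states.push_back(a);  // snapshot after this swap
            }
        }
    }
    return states;
}
```

For {9,2,7,5} it records exactly four states: {2,9,7,5}, {2,7,9,5}, {2,7,5,9} and {2,5,7,9}; the third pass compares 2 and 5 and makes no swap.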

void bubble_sort(int A[], int n) {
    int temp;
    for (int i = 0; i < n - 1; i++) {
        // (n-1) passes: once n-1 elements are in place, the last one is too
        for (int j = 0; j < n - i - 1; j++) {
            // (n-i-1) because the last i elements are already sorted
            if (A[j] > A[j + 1]) { // swap adjacent elements in the wrong order
                temp = A[j];
                A[j] = A[j + 1];
                A[j + 1] = temp;
            }
        }
    }
}

Observe that the above function always runs in O(n^2) time, even if the array is already sorted. It can be optimized by stopping the algorithm as soon as a complete pass of the inner loop makes no swap.
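A minimal sketch of that optimization, assuming a `std::vector<int>` instead of a raw array; the name `bubble_sort_early_exit` is hypothetical, and it returns the number of passes only so the early exit can be observed. A `swapped` flag records whether the current pass changed anything.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Bubble sort with early exit: if a whole pass makes no swap, the array is
// already sorted and we stop. Returns the number of passes performed.
int bubble_sort_early_exit(std::vector<int>& a) {
    int passes = 0;
    for (std::size_t i = 0; i + 1 < a.size(); ++i) {
        bool swapped = false;
        ++passes;
        for (std::size_t j = 0; j + 1 < a.size() - i; ++j) {
            if (a[j] > a[j + 1]) {
                std::swap(a[j], a[j + 1]);
                swapped = true;
            }
        }
        if (!swapped) {
            break;  // no swap in this pass: the array is sorted
        }
    }
    return passes;
}
```

On an already sorted array such as {1,2,3,4} it stops after a single pass, which makes the best case O(n); the worst case is still O(n^2).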

Note:

>Bubble sort is a stable, in-place sorting algorithm.

>Bubble sort is rarely used in practice because of its O(n^2) running time. Still, it is well worth learning, as it illustrates the basic ideas behind sorting.

Study Material for Bubble Sort

Insertion Sort

Insertion sort builds the sorted array one item at a time: it takes the elements one by one and inserts each into its correct position among the elements already sorted. This is similar to the way we arrange a hand of playing cards. Because it works by inserting each element at its proper position, it is called Insertion Sort.

To sort an array of size n in ascending order:

1: Iterate over the array from Arr[1] to Arr[n-1].

2: Compare the current element (key) to its predecessor.

3: If the key is smaller than its predecessor, compare it to the elements before that as well. Move the greater elements one position up to make space for the key, then insert the key there.

Let's try to understand the algorithm with an example: Arr[ ] = {69, 55, 2, 22, 1}.

At first our array looks like this:

{69,55,2,22,1}

Let us loop from index 1 to index 4.

For index=1, since 55 is smaller than 69, move 69 one position to the right and insert 55 before it.

{55,69,2,22,1}

For index=2, 2 is smaller than both 55 and 69. So shift 55 and 69 one position to the right and insert 2 before them.

{2,55,69,22,1}

For index=3, 22 is smaller than both 55 and 69 (but not than 2). So shift 55 and 69 one position to the right and insert 22 before them.

{2,22,55,69,1}

For index=4, 1 is smaller than all of 2, 22, 55 and 69. So shift all four one position to the right and insert 1 at the beginning.

{1,2,22,55,69}
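The steps above can be traced with a short sketch. It assumes a `std::vector<int>` copy, and the helper name `insertion_sort_trace` is hypothetical. To avoid a negative unsigned index, `j` here marks the slot where the key will go rather than the element before it.

```cpp
#include <cstddef>
#include <vector>

// Runs insertion sort on a copy of `a` and records the array after the key
// at each index 1..n-1 has been inserted (hypothetical helper).
std::vector<std::vector<int>> insertion_sort_trace(std::vector<int> a) {
    std::vector<std::vector<int>> steps;
    for (std::size_t i = 1; i < a.size(); ++i) {
        int key = a[i];
        std::size_t j = i;  // candidate slot for the key
        // Shift larger elements of the sorted prefix a[0..i-1] one step right.
        while (j > 0 && a[j - 1] > key) {
            a[j] = a[j - 1];
            --j;
        }
        a[j] = key;
        steps.push_back(a);
    }
    return steps;
}
```

For {69,55,2,22,1} the recorded steps are {55,69,2,22,1}, {2,55,69,22,1}, {2,22,55,69,1} and {1,2,22,55,69}.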

void insertionSort(int Arr[], int n) {
    int i, key, j;
    for (i = 1; i < n; i++) {
        key = Arr[i];
        j = i - 1;
        /* Move elements of Arr[0..i-1] that are greater than key
           to one position ahead of their current position */
        while (j >= 0 && Arr[j] > key) {
            Arr[j + 1] = Arr[j];
            j = j - 1;
        }
        Arr[j + 1] = key;
    }
}

Time Complexity: O(n^2) in the worst case (for example, a reverse-sorted array); O(n) when the array is already sorted.

Note:

It is an in-place, stable sorting algorithm.

Insertion sort is used when the number of elements is small. It is also useful when the input array is almost sorted, that is, when only a few elements of a large array are out of place.
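The "almost sorted" remark can be checked by counting shifts. In this sketch (the name `insertion_sort_count_shifts` is hypothetical, and a `std::vector<int>` is assumed), each shift fixes exactly one out-of-order pair, so the count equals the number of inversions: small for a nearly sorted array, n(n-1)/2 for a reversed one.

```cpp
#include <cstddef>
#include <vector>

// Insertion sort that also counts how many element shifts it performs.
// Each shift removes one inversion (a pair that is out of order).
long long insertion_sort_count_shifts(std::vector<int>& a) {
    long long shifts = 0;
    for (std::size_t i = 1; i < a.size(); ++i) {
        int key = a[i];
        std::size_t j = i;
        while (j > 0 && a[j - 1] > key) {
            a[j] = a[j - 1];  // shift a larger element one step right
            --j;
            ++shifts;
        }
        a[j] = key;
    }
    return shifts;
}
```

For {1,2,3,5,4,6,7,8} only one shift is needed, while the reversed array {5,4,3,2,1} needs 10.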

Study Material for Insertion Sort

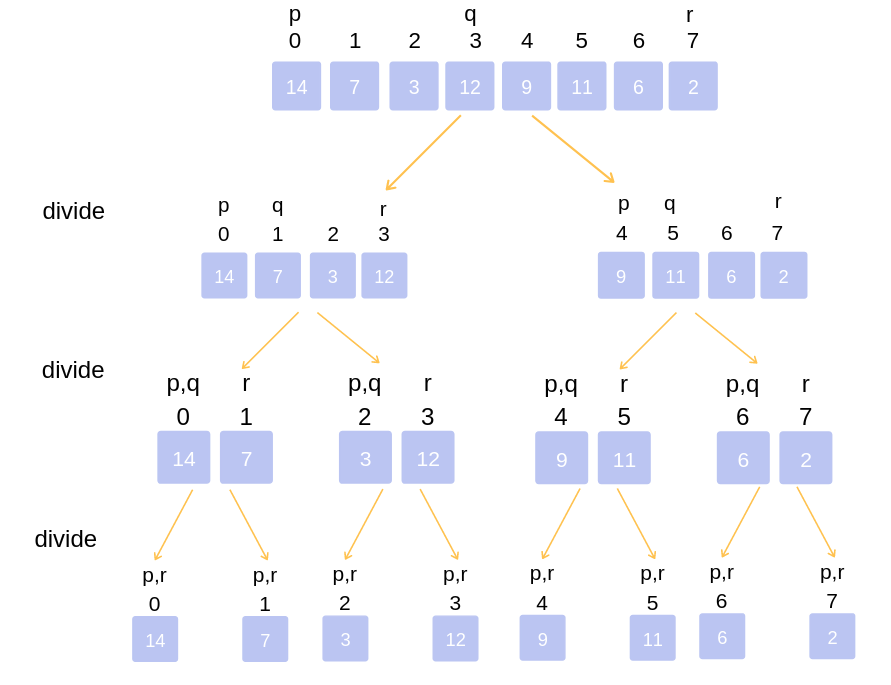

Merge Sort

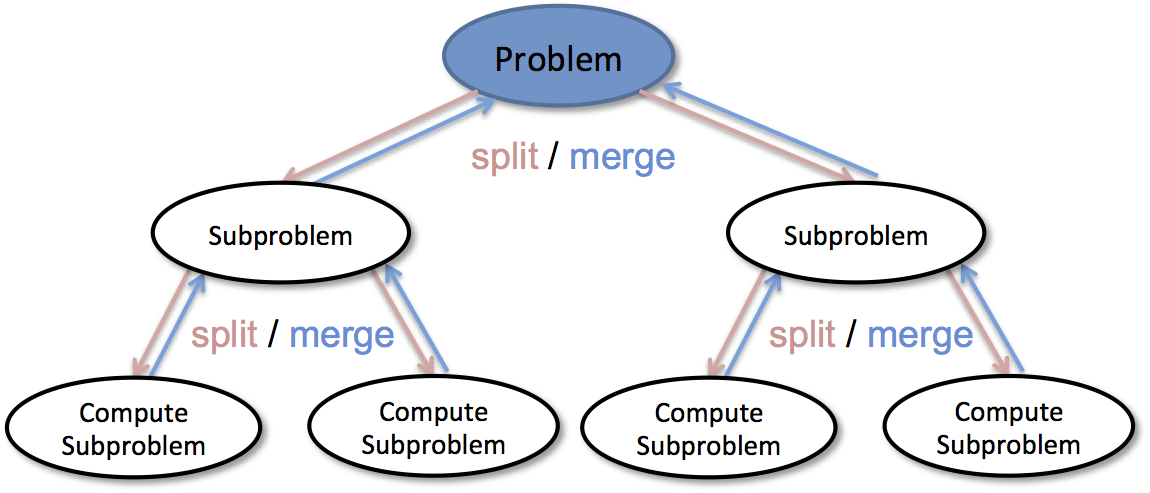

Merge sort works on the principle of Divide and Conquer.

It is one of the most efficient comparison-based sorting algorithms: its running time is O(N log N) in every case. It is also a classic example of the divide and conquer technique.

The main idea is to divide the given array recursively into two halves until each part contains a single element, which is trivially sorted. The most important part of the algorithm is merging two sorted arrays into a single sorted array.

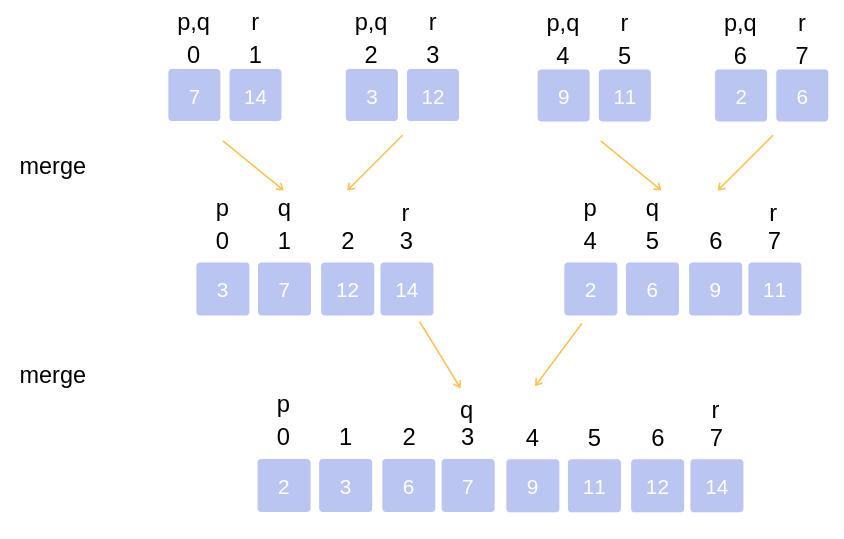

Let us first understand how to merge two sorted arrays:

1. We take two pointers, which initially point to the start of each of the two sorted arrays.

2. We create a new, empty auxiliary array whose length equals the sum of the lengths of the two sorted arrays.

3. We compare the elements pointed to by the two pointers, insert the smaller one into the new array, and advance that pointer (assuming we are sorting in non-decreasing order).

4. We repeat this until one of the pointers reaches the end of its array. Then we copy the remaining elements of the other array into the new array one by one.

(Have a look at the merge function in the following implementation of merge sort)
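The four merging steps can also be sketched on their own, independent of merge sort. This version assumes the two sorted arrays are given as `std::vector<int>` and returns a new vector; the name `merge_sorted` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Merges two vectors sorted in non-decreasing order into one sorted vector
// using two pointers (i into a, j into b).
std::vector<int> merge_sorted(const std::vector<int>& a, const std::vector<int>& b) {
    std::vector<int> out;
    out.reserve(a.size() + b.size());  // step 2: room for both arrays
    std::size_t i = 0, j = 0;          // step 1: pointers at the start
    while (i < a.size() && j < b.size()) {
        // step 3: take the smaller element; on a tie take from `a`
        if (a[i] <= b[j]) {
            out.push_back(a[i++]);
        } else {
            out.push_back(b[j++]);
        }
    }
    // step 4: copy whatever is left in the other array
    while (i < a.size()) out.push_back(a[i++]);
    while (j < b.size()) out.push_back(b[j++]);
    return out;
}
```

For example, merging the sorted halves {3,7,12,14} and {2,6,9,11} gives {2,3,6,7,9,11,12,14}. Taking from the first array on ties keeps equal elements in their original order.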

Now let's understand the merge sort algorithm:

>First, we call the mergeSort function on the first half and on the second half of the array.

>Once the two halves are sorted, the only thing left to do is to merge them, so we call the merge function.

>We do this recursively. The base case is an array of just one element: it is already sorted, so the function simply returns.

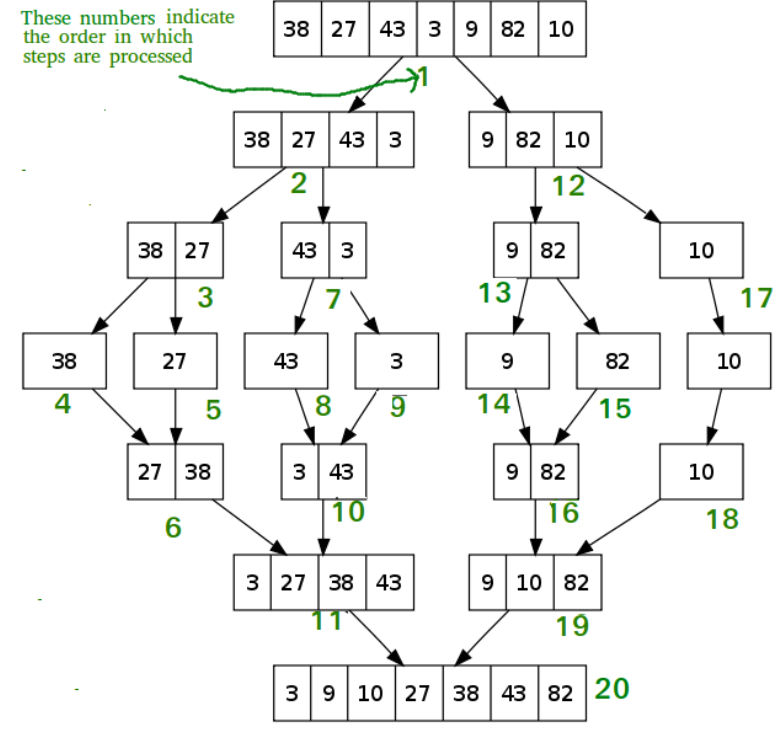

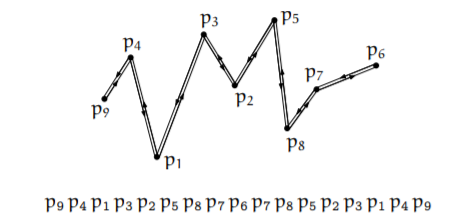

It's time for an example:

>Let's take an array, Arr[ ] = {14,7,3,12,9,11,6,2}.

>The following figure shows each step of dividing and merging the array.

>In the figure, segment (p to q) is the first part and segment (q+1 to r) is the second part of the array at each step. In the code below, p, q and r correspond to l, m and r.

#include <vector>

// First subarray is Arr[l..m]
// Second subarray is Arr[m+1..r]
void merge(int Arr[], int l, int m, int r) {
    int n1 = m - l + 1;
    int n2 = r - m;

    // Create temporary arrays (std::vector, because variable-length
    // arrays such as int L[n1] are not standard C++)
    std::vector<int> L(n1), R(n2);

    // Copy data to temp arrays L[] and R[]
    for (int i = 0; i < n1; i++)
        L[i] = Arr[l + i];
    for (int j = 0; j < n2; j++)
        R[j] = Arr[m + 1 + j];

    // Merge the temp arrays back into Arr[l..r]
    int i = 0, j = 0; // initial indices of the two subarrays
    int k = l;        // initial index of the merged subarray
    while (i < n1 && j < n2) {
        if (L[i] <= R[j]) { // '<=' keeps equal elements in order (stable)
            Arr[k] = L[i];
            i++;
        } else {
            Arr[k] = R[j];
            j++;
        }
        k++;
    }
    while (i < n1) { // copy the remaining elements of L[], if there are any
        Arr[k] = L[i];
        i++;
        k++;
    }
    while (j < n2) { // copy the remaining elements of R[], if there are any
        Arr[k] = R[j];
        j++;
        k++;
    }
}

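// l is the left index and r is the right index of the subarray of Arr to be sorted.
// To sort a whole array of size n, call mergeSort(Arr, 0, n - 1).
void mergeSort(int Arr[], int l, int r) {
    if (l >= r) {
        return; // base case: zero or one element is already sorted
    }
    int m = l + (r - l) / 2; // middle index; this form avoids overflow of l + r
    mergeSort(Arr, l, m);
    mergeSort(Arr, m + 1, r);
    merge(Arr, l, m, r);
}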

Time Complexity: An array of size N is halved about log N times before every part has a single element, and merging all the sublists at one level of the recursion takes O(N) time. Hence the running time of this algorithm is O(N log N) in the worst case (in fact, in every case).
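The same argument can be written as a recurrence. This is a sketch under two simplifying assumptions: N is a power of two, and merging n elements costs at most cn for some constant c (with T(1) = c).

```latex
\begin{aligned}
T(N) &= 2\,T\!\left(\tfrac{N}{2}\right) + cN \\
     &= 4\,T\!\left(\tfrac{N}{4}\right) + 2cN \\
     &= 2^k\,T\!\left(\tfrac{N}{2^k}\right) + k\,cN \\
     &= cN + cN\log_2 N \qquad (\text{taking } 2^k = N,\ k = \log_2 N) \\
     &= O(N \log N)
\end{aligned}
```

Each line of the expansion corresponds to one more level of halving; there are log2 N levels, and each contributes cN work for merging.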

Note:

It is not an in-place sorting algorithm: merging needs O(N) extra space for the temporary arrays.

Study Material for Merge Sort

STL-sort()

Competitive programmers love C++, and the STL is one of the reasons. The STL (Standard Template Library) provides many useful built-in functions, and sort() is one of the most useful of them.

sort() sorts the elements in a range in O(N log N) time, where N is the length of that range.

It generally takes two parameters: the first points to the first element of the array/vector range to be sorted, and the second points one past the last element of that range (the range is half-open, [first, last)). The third parameter is optional and can be used when we want to sort according to some custom rule. By default, it sorts in non-decreasing order.

#include <algorithm> // sort
#include <iostream>  // cout

using namespace std;

int main() {
    int arr[] = {10, 5, 18, 99, 6, 7};
    int n = sizeof(arr) / sizeof(arr[0]); // size of array (6)

    /* Here we pass two parameters: a pointer to the beginning of the
       array and a pointer one past the last element to be sorted */
    sort(arr, arr + n);

    cout << "\nArray after sorting using "
            "default sort is : \n";
    for (int i = 0; i < n; ++i)
        cout << arr[i] << " ";
    return 0;
}

Output:

Array after sorting using default sort is :

5 6 7 10 18 99
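The optional third parameter described earlier is a comparator: a function or function object that returns true when its first argument should come before its second. A minimal sketch, assuming `std::vector<int>` input; the helper names `sorted_descending` and `sorted_by_abs` are hypothetical, while `std::sort` and `std::greater` are standard.

```cpp
#include <algorithm>
#include <cstdlib>
#include <functional>
#include <vector>

// Sorts a copy of v in non-increasing order by passing std::greater<int>()
// as the third (comparator) argument of std::sort.
std::vector<int> sorted_descending(std::vector<int> v) {
    std::sort(v.begin(), v.end(), std::greater<int>());
    return v;
}

// A custom rule given as a lambda: sort by absolute value. std::sort is not
// stable, so elements with equal absolute values may come out in any order.
std::vector<int> sorted_by_abs(std::vector<int> v) {
    std::sort(v.begin(), v.end(),
              [](int x, int y) { return std::abs(x) < std::abs(y); });
    return v;
}
```

With the array from the program above, `sorted_descending({10, 5, 18, 99, 6, 7})` gives {99, 18, 10, 7, 6, 5}.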

To sort a complete vector v, we pass v.begin() and v.end() as the parameters. The vector need not hold basic data types: it can be a vector of pairs, a vector of vectors, a vector of vectors of pairs, and so on. In those cases, by default the objects are sorted lexicographically. To sort them in any other particular order, we pass a comparator function as the third parameter.
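As an illustration, the sketch below sorts a vector of pairs twice: once with the default lexicographic order (by first element, then by second), and once with a comparator function. The ordering rule in `by_second_then_first_desc` (second element ascending, ties broken by first element descending) is a hypothetical example, as are the helper names.

```cpp
#include <algorithm>
#include <utility>
#include <vector>

using Pair = std::pair<int, int>;

// Default: pairs compare lexicographically (first, then second).
std::vector<Pair> sort_default(std::vector<Pair> v) {
    std::sort(v.begin(), v.end());
    return v;
}

// Comparator: true if a should come before b. Orders by second element
// ascending, breaking ties by first element descending.
bool by_second_then_first_desc(const Pair& a, const Pair& b) {
    if (a.second != b.second) return a.second < b.second;
    return a.first > b.first;
}

std::vector<Pair> sort_custom(std::vector<Pair> v) {
    std::sort(v.begin(), v.end(), by_second_then_first_desc);
    return v;
}
```

For {(3,1), (1,2), (3,0), (1,1)}, the default order is {(1,1), (1,2), (3,0), (3,1)}, while the custom comparator gives {(3,0), (3,1), (1,1), (1,2)}. A comparator must define a strict ordering (never return true for two equal elements), otherwise std::sort's behaviour is undefined.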